This document provides instructions for Protein Prospector administrative tasks on both LINUX and Microsoft Windows platforms.

- Updating Protein/DNA Databases

- Modifying the main configuration file

- Parameters used by all versions of Protein Prospector

- The Sequence Database Directory

- The Upload Temporary Directory

- The Maximum Size of an Uploaded File

- The Path of the R Executable

- Whether Temporary Data Files Used By R are Kept

- Whether the UCSF Banner Should Be Displayed

- Logging Parameters

- The Search Timeout in Seconds

- The Maximum Number of Sequences that can be Entered in MS-Product

- The Maximum Number of Peaks in a Single Spectrum for an MS-Fit Search

- The Maximum Reported Hits Limit for MS-Fit

- Allowing FA-Index to Use Large Databases on Computers With a Small Amount of RAM

- The Root Directory of the Viewer Repository

- The Root Directory of the Site Database Repository

- Parameters relevant only if the installation includes Batch-Tag searching

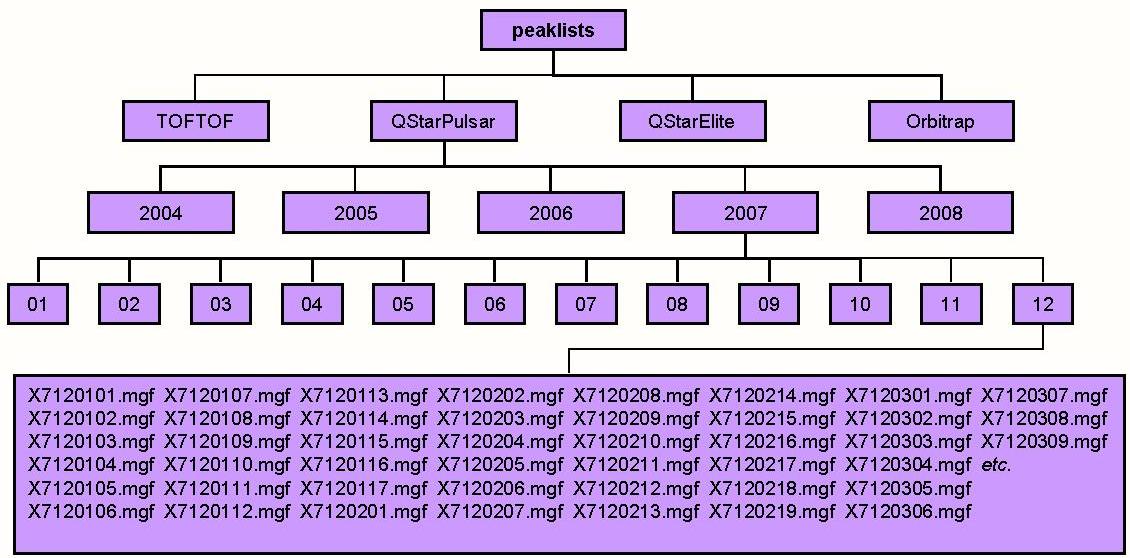

- The Root Directory of the Centroid Data File Repository

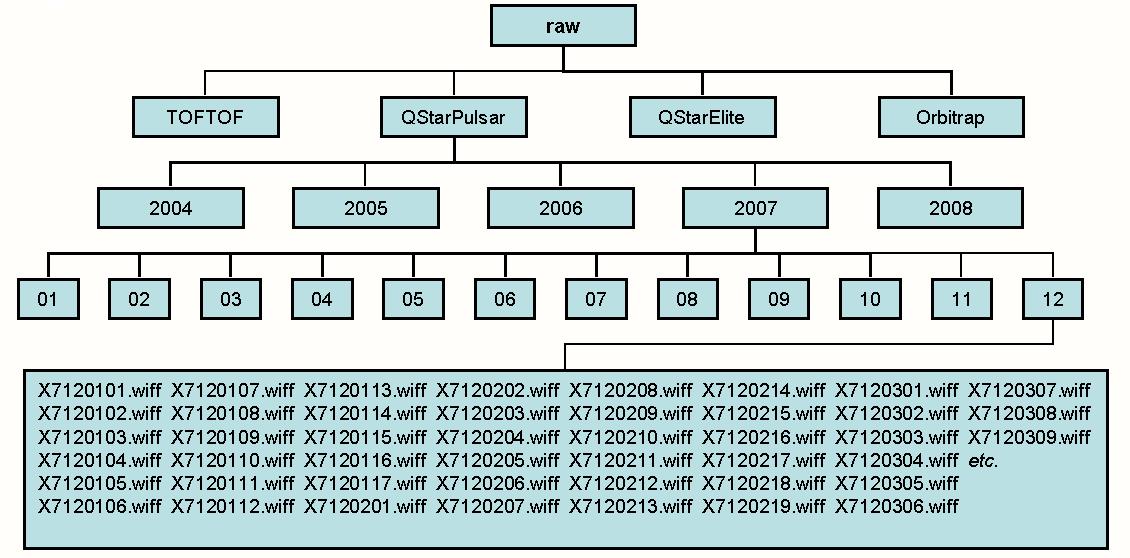

- The Root Directory of the Raw Data File Repository

- The Root Directory of the Repository for Uploaded Files

- Whether Multiple Processors are Used for Batch-Tag Searches

- The Maximum Number of MSMS Spectra in a Group in a Batch-Tag Search

- The Minimum Number of MSMS Spectra in a Group in a Batch-Tag Crosslink Search

- Whether to Duplicate Scans if the Charge isn't Specified in an Uploaded Centroided File

- The MPICH2 Run Executable (Windows Only)

- The Arguments Used When Running MPICH2 (Windows Only)

- The Minimum Password Length for the Batch-Tag Search Database

- The Batch-Tag Search Database Login Parameters

- The Batch-Tag Daemon Parameters

- Whether to Join Results Files

- Whether to Do the Expectation Value Search First

- Whether to Forward Requests for Raw Data to a Different Server

- virtual_dir_proxy Parameter

- Raw Data Processing Parameters

- Modifying the Javascript file

- Changing the HTML link from the accession number in the search results

- Changing the HTML link from the UniProt IDs in the search results

- Changing the HTML link from the MS-Digest index number in the search results

- To Add/Change a Taxonomy Filter

- To Change Amino Acids Attributes

- To Add/Change Variable Amino Acid Modifications

- To Add/Change Constant Amino Acid Modifications

- To Add/Change the List of N-Terminus Modifications that can form a1 and b1 Ions

- To Add/Change Elements

- To Add/Change Enzyme Digest Rules

- To Add/Change Immonium Ion Recognition Rules

- To Change the Appearance of Graphs in the Package

- To Change the Protein Prospector Fragmentation Parameters

- To Change the Glycosylation B and Y ions considered

- To Change Additions to the List of Glycosylation Modifications

- To Change the Glycosylation Modification Building Blocks and Related Attributes

- To Change the Remote Database URL definitions that Enable HTML Links

- To Change the GlyTouCan IDs for given Glycosylation Modifications

- To Change the Protein Prospector Instrument Parameters

- To Change the Matrix Modification Definitions

- To Change the Computer Optimisation Parameters

- To Add/Change MS-Homology Score Matrices

- To Add/Change the List of Species for dbEST Prefix Databases

- To Add/Change the List of Databases Accessible by the autofaindex.pl Script

- To Add/Change Parameters Associated with Site Databases

- To Add/Change the Indices Used by MS-Digest

- To Modify the Cross Linking Options in MS-Bridge/MS-Tag/Batch-Tag

- To Modify the Options on the MS-Bridge Link AAs menu

- To Add/Change the Quantitation Options

- To Add/Change the Purity Coefficients

- To Change the Link to the Unimod Web Site

- To Add/Change the MGF (Mascot Generic Format) Parameters

- To Modify the Distribution File

- To Modify the Data Repository Instrument File

- To Modify the Default Search Parameters

- To Modify the Parameters Used for the Expectation Value Search

- To Modify the Parameters for Calculating Expectation Values by the Linear Tail Fit Method and FDR Limits

- To Modify the Parameters Related to the Bond Score Max Option

- To Modify the Coefficients for Calculating Discriminant Scores

- Updating the taxonomy files

- Initializing the mySQL Database

- Backing Up the mySQL Database

- Restoring the mySQL Database

- Restoring the mySQL Database on Windows from Database mySQL Database Files

- Resetting the mySQL root password on Windows

- Resetting the mySQL prospector password on Windows

- Installing Batch-Tag Daemon

- Adding Additional MS-Viewer Automatic Conversion Scripts

- Mascot Converter - mascot_converter.pl

- X!Tandem Converter - tandem_converter.pl

- Modifying the Viewer Conversion Parameter File

- Adding Additional MS-Viewer MaxQuant SILAC Labelling Options

- Clearing the Cache When Updating Javascript Files

- LINUX Installation

- Download and Install

- Enable Cut and Paste

- Give Your Username Permission to Use sudo

- Configure the Network Capabilities

- Disabling Secure LINUX

- Setting Up Network Time Protocol

- Installing the Gnome Desktop

- Installing the Firefox Browser

- Install Required Packages

- Install Apache

- Compiling Prospector

- Create the Prospector Distribution

- Configure MPI

- Configure Apache

- Install and Configure mySQL

- Create the Sequence Database Directory and Add the SwissProt Database

- Create the Repository Directories

- Ensure that the Batch-Tag Daemon Starts when the System is Booted

- Start the Required Services

- Installing a Raw Daemon

- Example LINUX Installations

- Building the Windows Installers

Most of Protein Prospector's configuration files are in the directory. The files are all text files and must be edited with a text editor. Suitable programs are Notepad on a Windows platform or vi/emacs on a LINUX platform. You should not use a word processor to edit the files.

A list of all the parameter files is shown below with a link to the relevant manual section.

Configuration files required by all versions of Protein Prospector:

- aa.txt

- acclinks.txt

- b1.txt

- bond_score.txt

- computer.txt

- dbEST.spl.txt

- dbhosts.txt

- dbstat_hist.par.txt

- distribution.txt

- elements.txt

- enzyme.txt

- enzyme_comb.txt

- expectation.txt

- fit_graph.par.txt

- fragmentation.txt

- glyco_by.txt

- glyco_cation.txt

- glyco_info.txt

- glycolinks.txt

- glytoucan_id.txt

- hist.par.txt

- homology.txt

- idxlinks.txt

- imm.txt

- indicies.txt

- info.txt

- instrument.txt

- link_aa.txt

- links.txt

- links_comb.txt

- mat_score.txt

- mgf.xml

- mq_silac_options.txt

- pr_graph.par.txt

- site_groups.txt

- site_groups_up.txt

- sp_graph.par.txt

- taxonomy.txt

- taxonomy_groups.txt

- unimod.txt

- uniprot_names.txt

- usermod_frequent.txt

- usermod_glyco.txt

- usermod_msproduct.txt

- usermod_quant.txt

- usermod_silac.txt

- usermod_xlink.txt

- viewer_conv.txt

- dbstat/default.xml

- msbridge/default.xml

- mscomp/default.xml

- msdigest/default.xml

- msfit/default.xml

- msfitupload/default.xml

- mshomology/default.xml

- msisotope/default.xml

- msnonspecific/default.xml

- mspattern/default.xml

- msproduct/default.xml

- msseq/default.xml

- mstag/default.xml

- taxonomy/citations.dmp

- taxonomy/delnodes.dmp

- taxonomy/division.dmp

- taxonomy/gc.prt

- taxonomy/gencode.dmp

- taxonomy/merged.dmp

- taxonomy/names.dmp

- taxonomy/nodes.dmp

- taxonomy/readme.txt

- taxonomy/speclist.txt

- taxonomy/taxonomy_cache.txt

Configuration files used by Batch-Tag/Search Compare:

- cr_graph.par.txt

- disc_score.txt

- disc_score2.txt

- error_hist.par.txt

- expectation.xml

- upidlinks.txt

- inst_dir.txt

- iTRAQ4plex.txt

- iTRAQ8plex.txt

- mmod_hist.par.txt

- quan.txt

- quan_msms.xml

- repository.xml

- batchtag/default.xml

- searchCompare/default.xml

One other file that you may need to modify is

-

Obtain FASTA formatted sequence database files for the seqdb directory (specified in the main configuration file):

Locations to download FASTA formatted database files via ftp:

- Genbank: Production of this database as a single large file has been discontinued. Release 166 (June 2008) was the last release. The database download file (rel166.fsa_aa.gz) could still be found with a Google search at the time of writing (Feb 2009). Individual protein FASTA files are now provided on a per-division basis with names like gbXXXX.fsa_aa.gz, where XXXX is the division.

| | uniprot_sprot.fasta | uniprot_trembl.fasta | Combined |
|---|---:|---:|---:|
| # entries (3.2.2009) | 408,099 | 7,001,017 | 7,409,116 |
| | Size in Bytes | Size in Bytes | Size in Bytes |
| Downloaded File (.gz) | 63,686,261 | 1,347,048,256 | |
| Uncompressed Database File (Combined Database Files in the last column) | 193,201,640 | 3,113,770,239 | 3,306,971,879 |
| Protein Prospector acc File | 6,010,380 | 110,905,168 | 117,434,752 |
| Protein Prospector idc File | 3,264,792 | 56,008,136 | 59,272,928 |
| Protein Prospector idi File | 12 | 12 | 12 |
| Protein Prospector idp File | 3,264,792 | 56,008,136 | 59,272,928 |
| Protein Prospector mw File | 6,529,584 | 112,016,272 | 118,545,856 |
| Protein Prospector pi File | 6,529,584 | 112,016,272 | 118,545,856 |
| Protein Prospector tax File | 3,260,714 | 62,047,517 | 65,747,868 |
| Protein Prospector tl File | 107,027 | 149,468 | 176,928 |
| Total Disk Space Requirement | 222,168,525 | 3,622,921,220 | 3,845,969,007 |

| | uniprot_sprot.fasta | uniprot_trembl.fasta | Combined |
|---|---:|---:|---:|
| # entries (9.4.2024) | 571,684 | 245,532,082 | 245,896,766 |
| | Size in Bytes | Size in Bytes | Size in Bytes |
| Downloaded File (.gz) | 93,179,359 | 61,346,322,649 | |
| Uncompressed Database File (Combined Database Files in the last column) | 285,491,239 | 119,839,928,596 | 120,125,419,835 |
| Protein Prospector acc File | 7,914,267 | | 4,952,710,728 |
| Protein Prospector idc File | 4,574,912 | | 1,967,174,128 |
| Protein Prospector idi File | 16 | | 16 |
| Protein Prospector idp File | 4,574,912 | | 1,967,174,128 |
| Protein Prospector mw File | 9,149,824 | | 3,934,348,256 |
| Protein Prospector pi File | 9,149,824 | | 3,934,348,256 |
| Protein Prospector tax File | 4,016,726 | | 2,348,124,376 |
| Protein Prospector tl File | 124,783 | | 267,818 |
| Total Disk Space Requirement | 324,996,503 | | 139,229,567,541 |

| # entries (3.2.2009) | 358,517 |
|---|---:|
| | Size in Bytes |
| Downloaded File (swissprot.gz) | 99,494,763 |
| Uncompressed Database File | 186,889,757 |
| Protein Prospector acc File | 6,702,234 |
| Protein Prospector idc File | 2,868,136 |
| Protein Prospector idi File | 12 |
| Protein Prospector idp File | 2,868,136 |
| Protein Prospector mw File | 5,736,272 |
| Protein Prospector pi File | 5,736,272 |
| Protein Prospector tax File | 3,212,380 |
| Protein Prospector tl File | 106,959 |
| Protein Prospector unk File | 217 |
| Total Disk Space Requirement | 214,120,375 |

| # entries (2.3.2009) | 8,163,889 |
|---|---:|
| | Size in Bytes |
| Downloaded File (est_human.gz) | 1,431,066,156 |
| Uncompressed Database File | 5,260,595,090 |
| Protein Prospector acn File | 65,311,112 |
| Protein Prospector idc File | 65,311,112 |
| Protein Prospector idi File | 12 |
| Protein Prospector idp File | 65,311,112 |
| Protein Prospector tax File | 72,363,911 |
| Protein Prospector tl File | 14 |
| Total Disk Space Requirement | 5,528,892,363 |

| # entries (2.3.2009) | 4,850,605 |
|---|---:|
| | Size in Bytes |
| Downloaded File (est_mouse.gz) | 793,281,057 |
| Uncompressed Database File | 2,975,867,559 |
| Protein Prospector acn File | 38,804,840 |
| Protein Prospector idc File | 38,804,840 |
| Protein Prospector idi File | 12 |
| Protein Prospector idp File | 38,804,840 |
| Protein Prospector tax File | 42,544,368 |
| Protein Prospector tl File | 27 |
| Total Disk Space Requirement | 3,134,826,468 |

| # entries (2.3.2009) | 46,629,780 |
|---|---:|
| | Size in Bytes |
| Downloaded File (est_others.gz) | 8,701,594,158 |
| Uncompressed Database File | 32,913,709,418 |
| Protein Prospector acn File | 373,038,240 |
| Protein Prospector idc File | 373,038,240 |
| Protein Prospector idi File | 12 |
| Protein Prospector idp File | 373,038,240 |
| Protein Prospector tax File | 455,205,333 |
| Protein Prospector tl File | 18,636 |
| Total Disk Space Requirement | 34,488,048,119 |

| # entries (3.2.2009) | 7,787,617 |
|---|---:|
| | Size in Bytes |
| Downloaded File (nr.gz) | 1,845,189,406 |
| Uncompressed Database File | 4,239,632,306 |
| Protein Prospector acn File | 132,047,624 |
| Protein Prospector idc File | 62,300,936 |
| Protein Prospector idi File | 12 |
| Protein Prospector idp File | 62,300,936 |
| Protein Prospector mw File | 124,601,872 |
| Protein Prospector pi File | 124,601,872 |
| Protein Prospector tax File | 84,744,299 |
| Protein Prospector tl File | 1,983,235 |
| Protein Prospector unk File | 62,991 |
| Protein Prospector unr File | 19,824,736 |
| Total Disk Space Requirement | 4,852,100,819 |

| # entries (6.2008) | 13,676,588 |
|---|---:|
| | Size in Bytes |
| Downloaded File (rel166.fsa_aa.gz) | 2,017,792,092 |
| Uncompressed Database File | 4,630,738,490 |
| Protein Prospector acn File | 109,412,704 |
| Protein Prospector idc File | 109,412,704 |
| Protein Prospector idi File | 12 |
| Protein Prospector idp File | 109,412,704 |
| Protein Prospector mw File | 218,825,408 |
| Protein Prospector pi File | 218,825,408 |
| Protein Prospector tax File | 127,386,258 |
| Protein Prospector tl File | 1,731,481 |
| Protein Prospector unk File | 26,617 |
| Protein Prospector unr File | 73,525,075 |
| Total Disk Space Requirement | 5,599,296,861 |

| File | Description | Tag | Size in Bytes |
|---|---|---|---:|
| Aaegypti_nr.seq | Aedes aegypti from EnsEMBL | ens | 8,635 |
| Agambiae_nr.seq | Anopheles gambiae from EnsEMBL | ens | 7,474,716 |
| Amellifera_nr.seq | Apis mellifera from EnsEMBL | ens | 13,743,616 |
| Btaurus_nr.seq | Bos taurus from EnsEMBL | ens | 15,412,324 |
| Cbriggsae_nr.seq | Caenorhabditis briggsae from EnsEMBL | ens | 5,572,499 |
| Celegans_nr.seq | Caenorhabditis elegans from EnsEMBL | ens | 304,209 |
| Cfamiliaris_nr.seq | Canis familiaris from EnsEMBL | ens | 16,172,880 |
| Cintestinalis_nr.seq | Ciona intestinalis from EnsEMBL | ens | 10,745,415 |
| Cporcellus_nr.seq | Cavia porcellus from EnsEMBL | ens | 9,734,021 |
| Csavignyi_nr.seq | Ciona savignyi from EnsEMBL | ens | 12,428,143 |
| Dmelanogaster_nr.seq | Drosophila melanogaster from EnsEMBL | ens | 414,193 |
| Dnovemcinctus_nr.seq | Dasypus novemcinctus from EnsEMBL | ens | 9,816,788 |
| Drerio_nr.seq | Danio rerio from EnsEMBL | ens | 15,365,065 |
| Ecaballus_nr.seq | Equus caballus from EnsEMBL | ens | 15,443,676 |
| Eeuropaeus_nr.seq | Erinaceus europaeus from EnsEMBL | ens | 10,043,146 |
| Etelfairi_nr.seq | Echinops telfairi from EnsEMBL | ens | 11,047,074 |
| Fcatus_nr.seq | Felis catus from EnsEMBL | ens | 8,815,964 |
| Gaculeatus_nr.seq | Gasterosteus aculeatus from EnsEMBL | ens | 17,265,152 |
| Ggallus_nr.seq | Gallus gallus from EnsEMBL | ens | 12,988,589 |
| Hsapiens_nr.seq | Homo sapiens from EnsEMBL | ens | 8,789,424 |
| Lafricana_nr.seq | Loxodonta africana from EnsEMBL | ens | 10,406,380 |
| Mdomestica_nr.seq | Monodelphis domestica from EnsEMBL | ens | 23,137,550 |
| Mlucifugus_nr.seq | Myotis lucifugus from EnsEMBL | ens | 11,143,933 |
| Mmulatta_nr.seq | Macaca mulatta from EnsEMBL | ens | 21,669,491 |
| Mmurinus_nr.seq | Microcebus murinus from EnsEMBL | ens | 11,230,548 |
| Mmusculus_nr.seq | Mus musculus from EnsEMBL | ens | 8,208,552 |
| Oanatinus_nr.seq | Ornithorhynchus anatinus from EnsEMBL | ens | 15,580,576 |
| Ocuniculus_nr.seq | Oryctolagus cuniculus from EnsEMBL | ens | 10,189,512 |
| Ogarnettii_nr.seq | Otolemur garnettii from EnsEMBL | ens | 10,672,503 |
| Olatipes_nr.seq | Oryzias latipes from EnsEMBL | ens | 15,275,454 |
| Oprinceps_nr.seq | Ochotona princeps from EnsEMBL | ens | 10,970,832 |
| Pberghei_nr.seq | Plasmodium berghei ANKA from PlasmoDB | plasmo | 283,581 |
| Pchabaudi_nr.seq | Plasmodium chabaudi from PlasmoDB | plasmo | 190,435 |
| Pfalciparum_nr.seq | Plasmodium falciparum 3D7 from PlasmoDB | plasmo | 411,261 |
| Pknowlesi_nr.seq | Plasmodium knowlesi H from PlasmoDB | plasmo | 4,529,623 |
| Ppygmaeus_nr.seq | Pongo pygmaeus from EnsEMBL | ens | 14,226,824 |
| Ptroglodytes_nr.seq | Pan troglodytes from EnsEMBL | ens | 20,749,232 |
| Pvivax_nr.seq | Plasmodium vivax SaI-1 from PlasmoDB | plasmo | 4,384,825 |
| Pyoelii_nr.seq | Plasmodium yoelii 17XNL from PlasmoDB | plasmo | 59,354 |
| Rnorvegicus_nr.seq | Rattus norvegicus from EnsEMBL | ens | 17,694,720 |
| Saraneus_nr.seq | Sorex araneus from EnsEMBL | ens | 8,941,578 |
| Scerevisiae_nr.seq | Saccharomyces cerevisiae from EnsEMBL | ens | 23,957 |
| Stridecemlineatus_nr.seq | Spermophilus tridecemlineatus from EnsEMBL | ens | 10,168,502 |
| Tbelangeri_nr.seq | Tupaia belangeri from EnsEMBL | ens | 10,448,535 |
| Tgondii_nr.seq | Toxoplasma gondii from PlasmoDB | plasmo | 6,537,597 |
| Tnigroviridis_nr.seq | Tetraodon nigroviridis from EnsEMBL | ens | 31,802 |
| Trubripes_nr.seq | Takifugu rubripes from EnsEMBL | ens | 36,821,428 |
| Xtropicalis_nr.seq | Xenopus tropicalis from EnsEMBL | ens | 16,617,008 |
| sludge_aus_nr.seq | Australian sludge | sludge | 9,944,026 |
| sludge_us1_nr.seq | US sludge, Jazz Assembly | sludge | 4,961,736 |
| sludge_us2_nr.seq | US sludge, Phrap Assembly | sludge | 8,221,065 |
| swiss_nr.seq | SwissProt + updates | sp | 175,836,033 |
| swiss_varsplic_nr.seq | SwissProt splice variants | sp_vs | 17,359,720 |
| trembl_nr.seq | TrEMBL + updates | tr | 2,287,644,410 |
| wormpep_nr.seq | WormPep from the Sanger center | wp | 326,234 |
| yeastpep_nr.seq | Yeast ORFs from Stanford | yp | 139,418 |
| nr_prot.tar.gz | Compressed tarball of above files and documentation | | 1,311,126,484 |
| nr_prot.tar | Uncompressed tarball | | 3,006,679,040 |

| # entries (3.2.2009) | 312,942 |
|---|---:|
| | Size in Bytes |
| Downloaded File (owl.fasta.Z) | 68,452,223 |
| Uncompressed Database File | 126,681,299 |
| Protein Prospector acc File | 5,278,314 |
| Protein Prospector idc File | 2,503,536 |
| Protein Prospector idi File | 12 |
| Protein Prospector idp File | 2,503,536 |
| Protein Prospector mw File | 5,007,072 |
| Protein Prospector pi File | 5,007,072 |
| Protein Prospector tax File | 2,540,928 |
| Protein Prospector tl File | 148,497 |
| Protein Prospector unk File | 289,799 |
| Protein Prospector unr File | 3,854,820 |
| Total Disk Space Requirement | 153,814,885 |

The UniProtKB database is made up from a concatenation of uniprot_sprot.fasta.gz and uniprot_trembl.fasta.gz from the directory ftp://ftp.ebi.ac.uk/pub/databases/uniprot/knowledgebase.

The Ludwignr database is a non-redundant database made up from several smaller databases contained in the directory ftp://ftp.ch.embnet.org/pub/databases/nr_prot. You need to download the ones you are interested in individually and then concatenate them together to make one file. The database files currently have a .seq suffix.

To concatenate files on the LINUX operating system you can use the cat command from the command line. On Windows you could install cygwin and use its cat command. Alternatively you could use the Windows copy command from a command window. For example:

copy file1 + file2 + file3 DestFile

copy *.seq FinalDatabase
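The same concatenation can be sketched in Python, which behaves identically on LINUX and Windows. This is a minimal sketch and not part of Protein Prospector; the function name `concatenate_files` and the paths in the usage comment are hypothetical.

```python
import shutil
from pathlib import Path


def concatenate_files(sources, destination):
    """Write each source file, in the order given, into destination.

    Files are copied byte for byte, like `cat a b > dest`, so each
    source should already end with a newline.
    """
    with open(destination, "wb") as out:
        for source in sources:
            with open(source, "rb") as part:
                shutil.copyfileobj(part, out)


# Hypothetical usage: join every downloaded .seq file into one database file.
# parts = sorted(Path("nr_prot").glob("*.seq"))
# concatenate_files(parts, "FinalDatabase")
```

Sorting the input list keeps the order of the entries reproducible from one update to the next.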

Uncompress and rename the database files according to the format: UniProt.##, Genpept.##, Owl.##, SwissProt.##, NCBInr.##, dbEST.##, Ludwignr.##, IPI.##. The prefixes (UniProt, Genpept, Owl, SwissProt, NCBInr, dbEST, Ludwignr or IPI) are a necessary part of the name; they allow the software to identify the specific dialect of the FASTA format comment line used in each database. You may also use the corresponding lowercase prefixes gen, owl, swp, ipi, nr, or dbest. These can also be used for a second database that is of the same format as the uppercase one. If you want to know more details, please read the FA-Index manual, particularly the file naming sections.

Create indices in the seqdb directory for each database by using the FA-Index program. The indices are necessary for preliminary filtering by species, protein MW and protein pI. FA-Index must be run after each update of a database, even if the update is done by only adding new entries to the end of the original file.

If you really want to know what FA-Index does and why, please read the manual. Don't even think about trying to use proprietary databases or update databases daily, UNLESS you read the FA-Index manual, particularly the generic database filenaming sections.

FA-Index will create a file with a .usp suffix (e.g. Genpept.r95.usp) to which it writes the comment line of each FASTA entry from which it cannot parse the species. Viewing this file can help troubleshoot FASTA format problems for anyone using proprietary databases.

The main Protein Prospector configuration file is info.txt. Although the parameters defined in this file don't need to be in any particular order, it is best to retain the order used in the distributed version of the file. This will make diagnosis easier if problems occur.

The parameters in info.txt are name-value pairs. A name-value pair is a line in the file where the name is followed by a space character and the rest of the line is the value. The value may contain space characters. If just the name is specified then the value is assumed to be an empty string.

For example:

ucsf_banner false

Here ucsf_banner is the name and false is the value.

Each parameter has a default value which is used if the parameter is missing from the file. When the parameters are listed below, the default value is listed after the parameter. In some cases the default value is an empty string. Sometimes it is not appropriate to use the default value.
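The name-value format and the fallback to default values can be illustrated with a short Python sketch. It is only an illustration of the rules described above, not the parser Protein Prospector itself uses; the function names are hypothetical, the `DEFAULTS` table lists just three of the defaults given in this manual, and skipping blank lines is an assumption.

```python
def parse_info_lines(lines):
    """Parse name-value pairs: the name, one space, then the rest of the line."""
    params = {}
    for raw in lines:
        line = raw.rstrip("\r\n")
        if not line.strip():
            continue  # assumption: blank lines carry no parameter
        # Split at the first space only, so the value may contain spaces.
        name, _, value = line.partition(" ")
        params[name] = value  # value is "" when only the name is given
    return params


# A few defaults as listed in this manual (not the complete set).
DEFAULTS = {"seqdb": "seqdb", "upload_temp": "temp", "timeout": "0"}


def get_param(params, name):
    """Return the value from the file, or the default if it is missing."""
    return params.get(name, DEFAULTS.get(name, ""))
```

With this sketch, `parse_info_lines(["ucsf_banner false"])` yields a dictionary in which `ucsf_banner` maps to the string `false`.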

If the parameter is a directory name it is permissible to use UNC paths for Windows systems.

Some of these parameters are relevant to all Protein Prospector installations whereas others are only relevant if the installation includes Batch-Tag searching.

name: seqdb default value: seqdb

This is the directory containing the sequence databases. It is almost always appropriate to specify this. In most cases it is best from a performance point of view to have the sequence databases on a separate disk partition and administrators need to make sure this is big enough for current and likely future needs. One reason for this is to stop the database files becoming fragmented.

The sequence database directory can be on a network drive and UNC paths are permitted. However this is not recommended.

If several Prospector instances have been installed as a computing cluster then it is recommended that each of the cluster nodes has its own sequence database directory with identical copies of any databases used.

If the installation has both a UNIX and a Windows component it is possible to specify paths for both of these instances in the same file by using directives named:

name: seqdb_unix

and

name: seqdb_win

instead of:

name: seqdb
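One way to picture how the platform-specific directives could be chosen is the Python sketch below. It assumes that the `_unix` or `_win` variant takes precedence on the matching platform and that the plain name is used otherwise; that precedence, and the function name `resolve_platform_param`, are assumptions made for illustration and are not confirmed by this manual.

```python
import sys


def resolve_platform_param(params, base_name, default="", platform=None):
    """Pick the value for base_name that applies to the current platform."""
    platform = platform or sys.platform
    # sys.platform starts with "win" on Windows; everything else is treated as UNIX.
    suffix = "_win" if platform.startswith("win") else "_unix"
    specific = base_name + suffix
    if specific in params:
        return params[specific]  # assumed to win over the plain name
    return params.get(base_name, default)
```

For example, with `seqdb_unix` and `seqdb_win` both present, a LINUX node would read the first and a Windows node the second from the same file.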

name: upload_temp default value: temp

The MS-Fit Upload and Batch-Tag Web forms both have an Upload Data From File option. When the file is first uploaded it is copied into the upload temporary directory.

By default the upload temporary directory is simply set to the temp directory in the Protein Prospector distribution. If you have the basic Protein Prospector package (without the Batch-Tag option) there is no particular reason to change this. The only relevant program is MS-Fit Upload and this program will delete the file as soon as it has extracted the relevant information from it.

If you are using the Batch-Tag Web program then any successfully uploaded files are copied to a user data repository from the upload temporary directory. Thus it may be appropriate to locate the upload temporary directory on the same disk partition or network drive as the user data repository.

If the installation has both a UNIX and a Windows component it is possible to specify paths for both of these instances in the same file by using directives named:

name: upload_temp_unix

and

name: upload_temp_win

instead of:

name: upload_temp

name: max_upload_content_length default value: 0

It is possible to restrict the size in bytes of any uploaded file via the max_upload_content_length parameter. If an uploaded file exceeds this length then the search will be rejected and no files will be generated on the system.

If this parameter is set to zero then the size of the uploaded file is not restricted by Protein Prospector. It may however be restricted by the web server software.
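The rule for max_upload_content_length can be written as a small Python check. This is a sketch of the behaviour described above, not the code used by Protein Prospector; the function name `upload_allowed` is hypothetical.

```python
def upload_allowed(content_length, max_upload_content_length=0):
    """Return True if an upload of content_length bytes would be accepted."""
    if max_upload_content_length == 0:
        return True  # zero means Protein Prospector applies no limit
    # Only files that exceed the limit are rejected.
    return content_length <= max_upload_content_length
```

A web server limit, if one is configured, would still apply before a check like this is reached.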

name: r_command default value:

The R statistics package is used for drawing some of the plots in the Protein Prospector output. In order for this to work the R package needs to be installed and the r_command parameter needs to contain the full path to the R executable file.

For a Windows system this might be:

r_command C:\Program Files\R\R-2.2.1\bin\R

For a LINUX system it could be:

r_command /usr/bin/R

If the r_command parameter is missing from the info.txt file then Protein Prospector assumes that R is not installed and the relevant plots will be missing from the reports.

If the installation has both a UNIX and a Windows component it is possible to specify paths for both of these instances in the same file by using directives named:

name: r_command_unix

and

name: r_command_win

instead of:

name: r_command

Protein Prospector creates temporary data files which the R statistics package uses to draw its plots (such as the error scatterplot in MS-Product). These are normally deleted as soon as they have been used. If you set the keep_r_data_file flag to 1 they are instead retained in the temporary directory, from which they are generally deleted after 2 days. This flag is normally only set for diagnostic purposes or if you want access to the data.

name: ucsf_banner default value: false

A black UCSF banner can be displayed at the top of the search forms and results pages. You can choose whether or not to display this based on the ucsf_banner parameter. Note that this parameter will not turn the banner on or off on static web pages. To do this you need to modify the html/js/info.js file.

It is possible to create log files when search forms are submitted to the server. These can be used to diagnose problems.

The log files are created in a subdirectory of the logs directory. The subdirectory is named after the date the search form was submitted. The date format is yyyy_mm_dd to enable easy sorting of the directories.

Each binary (eg mssearch.cgi, msform.cgi, etc) can write out a log file. This will contain some of the CGI environment variables, the process ID, the program start and end times and optionally the search parameters.

The log files can be automatically deleted after a specified period. For example to delete the log files after 7 days the following name-value pair should be specified:

delete_log_days 7

If the delete_log_days parameter is set to zero the log files are never deleted. This is the default situation.

To write a log file for the mssearch.cgi binary which contains the basic logging information the following name-value pair should be specified:

mssearch_logging true

If you additionally want to record the parameters from the search form in the log file then you also need to specify the following name-value pair:

mssearch_parameter_logging true

The equivalent name-value pairs for msform.cgi and searchCompare.cgi are:

msform_logging true

msform_parameter_logging true

searchCompare_logging true

searchCompare_parameter_logging true

The log files are in XML format. However as they are not valid XML files until the associated search has finished they are first created with a .txt suffix which changes to a .xml suffix at the end of the search. Thus a file with .txt suffix either represents a search that is in progress or one that has failed.

A typical log file name is:

mssearch_000107_4264.xml

Here mssearch is the program binary name, 000107 is the form submission time in hhmmss format and 4264 is the process ID number.
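The naming conventions for log directories and log files can be illustrated in Python. This is a minimal sketch based only on the example name above; the function names and the regular expression are hypothetical, and zero-padded month and day values in the directory name are assumed from the yyyy_mm_dd format.

```python
import re
from datetime import date, time

# <program>_<hhmmss>_<pid>.<xml|txt>, e.g. mssearch_000107_4264.xml
LOG_NAME = re.compile(
    r"^(?P<program>.+)_(?P<hhmmss>\d{6})_(?P<pid>\d+)\.(?P<suffix>xml|txt)$"
)


def log_directory_name(day):
    """Return the yyyy_mm_dd subdirectory name for a submission date."""
    return day.strftime("%Y_%m_%d")


def parse_log_name(filename):
    """Split a log file name into program, submission time, pid and state."""
    match = LOG_NAME.match(filename)
    if match is None:
        raise ValueError("not a recognised log file name: " + filename)
    hhmmss = match.group("hhmmss")
    return {
        "program": match.group("program"),
        "submitted": time(int(hhmmss[0:2]), int(hhmmss[2:4]), int(hhmmss[4:6])),
        "pid": int(match.group("pid")),
        # A .txt suffix means the search is still running or has failed.
        "finished": match.group("suffix") == "xml",
    }
```

A script built on this could, for instance, list every log that still has a .txt suffix in a given day's directory to find failed searches.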

Typical contents of a basic log file:

<?xml version="1.0" encoding="UTF-8"?> <?Tue Apr 01 00:01:07 2008, ProteinProspector Version 5.0.0?> <program_log> <pid>4264</pid> <start_time>Tue Apr 01 00:01:07 2008</start_time> <SCRIPT_NAME>/prospector/cgi-bin/mssearch.cgi</SCRIPT_NAME> <REMOTE_HOST></REMOTE_HOST> <REMOTE_ADDR>127.0.0.1</REMOTE_ADDR> <HTTP_USER_AGENT>Mozilla/5.0 (Windows; U; Windows NT 5.2; en-US; rv:1.8.1.13) Gecko/20080311 Firefox/2.0.0.13 </HTTP_USER_AGENT> <HTTP_REFERER>http://localhost/prospector/cgi-bin/msform.cgi?form=mspattern</HTTP_REFERER> <end_time>Tue Apr 01 00:01:46 2008</end_time> <search_time>39 sec</search_time> </program_log>

Typical contents of a log file which also contains the search parameters:

<?xml version="1.0" encoding="UTF-8"?> <?Tue Apr 15 12:57:35 2008, ProteinProspector Version 5.0.0?> <program_log> <pid>1612</pid> <start_time>Tue Apr 15 12:57:35 2008</start_time> <SCRIPT_NAME>/prospector/cgi-bin/mssearch.cgi</SCRIPT_NAME> <REMOTE_HOST>127.0.0.1</REMOTE_HOST> <REMOTE_ADDR>127.0.0.1</REMOTE_ADDR> <HTTP_USER_AGENT>Mozilla/5.0 (Windows; U; Windows NT 5.2; en-US; rv:1.8.1.13) Gecko/20080311 Firefox/2.0.0.13 </HTTP_USER_AGENT> <HTTP_REFERER>http://localhost/prospector/cgi-bin/msform.cgi?form=msfitstandard</HTTP_REFERER> <parameters> <const_mod>Carbamidomethyl%20%28C%29</const_mod> <data>842.5100%0D%0A 856.5220%0D%0A 864.4733%0D%0A 870.5317%0D%0A 940.4754%0D%0A 943.4885%0D%0A 959.4934%0D%0A 970.4308%0D%0A 975.4785%0D%0A 1045.5580%0D%0A 1048.5716%0D%0A 1063.5712%0D%0A 1064.5892%0D%0A 1098.6185%0D%0A 1147.5876%0D%0A 1163.5996%0D%0A 1178.6280%0D%0A 1179.6014%0D%0A 1187.6316%0D%0A 1193.5461%0D%0A 1211.6607%0D%0A 1248.5664%0D%0A 1280.5561%0D%0A 1289.7670%0D%0A 1314.7019%0D%0A 1328.6521%0D%0A 1332.7121%0D%0A 1360.6820%0D%0A 1406.6617%0D%0A 1447.7010%0D%0A 1459.7311%0D%0A 1475.7471%0D%0A 1508.8107%0D%0A 1576.7986%0D%0A 1624.7649%0D%0A 1699.9255%0D%0A 1721.9134%0D%0A 1767.9147%0D%0A 1776.8961%0D%0A 1783.9077%0D%0A 1794.8293%0D%0A 1799.9017%0D%0A 1816.9798%0D%0A 1859.8805%0D%0A 2088.9872%0D%0A 2211.1046%0D%0A 2240.1851%0D%0A 2256.2412%0D%0A 2284.2079%0D%0A 2299.2019%0D%0A 2808.4450%0D%0A 3156.6352%0D%0A </data> <data_format>PP%20M%2FZ%20Charge</data_format> <data_source>Data%20Paste%20Area</data_source> <database>SwissProt.2007.12.04</database> <detailed_report>1</detailed_report> <dna_frame_translation>3</dna_frame_translation> <enzyme>Trypsin</enzyme> <full_pi_range>1</full_pi_range> <high_pi>10.0</high_pi> <input_filename>lastres</input_filename> <input_program_name>msfit</input_program_name> <instrument_name>ESI-Q-TOF</instrument_name> <low_pi>3.0</low_pi> <met_ox_factor>1.0</met_ox_factor> <min_matches>4</min_matches> 
<min_parent_ion_matches>1</min_parent_ion_matches> <missed_cleavages>1</missed_cleavages> <mod_AA>Peptide%20N-terminal%20Gln%20to%20pyroGlu</mod_AA> <mod_AA>Oxidation%20of%20M</mod_AA> <mod_AA>Protein%20N-terminus%20Acetylated</mod_AA> <mowse_on>1</mowse_on> <mowse_pfactor>0.4</mowse_pfactor> <ms_mass_exclusion>0</ms_mass_exclusion> <ms_matrix_exclusion>0</ms_matrix_exclusion> <ms_max_modifications>2</ms_max_modifications> <ms_max_reported_hits>5</ms_max_reported_hits> <ms_parent_mass_systematic_error>0</ms_parent_mass_systematic_error> <ms_parent_mass_tolerance>20</ms_parent_mass_tolerance> <ms_parent_mass_tolerance_units>ppm</ms_parent_mass_tolerance_units> <ms_peak_exclusion>0</ms_peak_exclusion> <ms_prot_high_mass>125000</ms_prot_high_mass> <ms_prot_low_mass>1000</ms_prot_low_mass> <msms_deisotope>0</msms_deisotope> <msms_join_peaks>0</msms_join_peaks> <msms_mass_exclusion>0</msms_mass_exclusion> <msms_matrix_exclusion>0</msms_matrix_exclusion> <msms_peak_exclusion>0</msms_peak_exclusion> <output_filename>lastres</output_filename> <output_type>HTML</output_type> <parent_mass_convert>monoisotopic</parent_mass_convert> <report_title>MS-Fit</report_title> <search_name>msfit</search_name> <sort_type>Score%20Sort</sort_type> <species>All</species> <user1_name>Acetyl%20%28K%29</user1_name> <user2_name>Acetyl%20%28K%29</user2_name> <user3_name>Acetyl%20%28K%29</user3_name> <user4_name>Acetyl%20%28K%29</user4_name> </parameters> <end_time>Tue Apr 15 12:57:46 2008</end_time> <search_time>11 sec</search_time> </program_log>
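Because a finished log file is well-formed XML with URL-encoded parameter values, it can be read with standard tools. The Python sketch below uses only the standard library; the function name `read_log` is hypothetical and the element names are those shown in the examples above. It would fail on a log that still has a .txt suffix, since such a file is not yet valid XML.

```python
import xml.etree.ElementTree as ET
from urllib.parse import unquote


def read_log(xml_text):
    """Return (summary, parameters) extracted from a finished log file."""
    root = ET.fromstring(xml_text)
    summary = {
        "pid": root.findtext("pid"),
        "search_time": root.findtext("search_time"),
    }
    parameters = {}
    node = root.find("parameters")  # absent when parameter logging is off
    if node is not None:
        for child in node:
            # Values are URL-encoded; a name such as mod_AA can repeat,
            # so every name maps to a list of decoded values.
            value = unquote(child.text or "").strip()
            parameters.setdefault(child.tag, []).append(value)
    return summary, parameters
```

Applied to the second example above, the `const_mod` entry would decode to `Carbamidomethyl (C)`.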

name: timeout default value: 0

The timeout parameter can be used to abort searches that have exceeded a given number of seconds. If this parameter is set to zero then search times are not restricted by Protein Prospector. They may however be restricted by the Web Server software. Note that Batch-Tag search times are never restricted by web server software as they are controlled by a search daemon.

name: max_msprod_sequences default value: 2

The max_msprod_sequences parameter controls the maximum number of sequences that can be simultaneously entered into MS-Product. The value can be 1, 2 or 3.

name: max_msfit_peaks default value: 1000

The max_msfit_peaks parameter controls the maximum number of peaks (after peak filtering) that can be used in an MS-Fit search. If too many peaks are used the program can run out of memory.

name: msfit_max_reported_hits_limit default value: 500

The msfit_max_reported_hits_limit parameter controls the limit on the Maximum Reported Hits parameter in MS-Fit. If too many hits are reported the program can run out of memory and it can take a long time to generate the report.

name: faindex_parallel default value: false

Whether to create FA-Index files in series or in parallel. If this is set to true then FA-Index does 2 passes through the database. If it is set to false it does 5 passes through the database. Setting it to true is faster but uses more memory. Generally you should only set this to true if you have a small amount of RAM and very large databases.

name: viewer_repository default value:

Root repository directory for the MS-Viewer spectral viewer program. If blank the results/msviewer directory is used. All data uploaded and saved by users of the MS-Viewer program is stored here. Note that until the data is saved in the repository it is only saved in a temporary directory for around 2 days.

name: site_db_dir default value:

If modification site databases are required, a directory needs to be specified to hold them. As site databases are SQLite files it is important that the directory specified is not on a network filesystem, particularly not an NFS filesystem. Within the site database directory there should be a subdirectory for each FASTA database for which there is a site database. E.g. if the FASTA database is called SwissProt.2016.9.6.fasta then the subdirectory should be called SwissProt.2016.9.6. Within this directory there should be a further subdirectory for each site database. Each of these subdirectories holds the files from which the site database is created, and an SQLite file is created for each of them.
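As a minimal sketch of the layout described above, the following Python helper derives the expected subdirectory for one site database. The function name, the example root directory and the site database name `phospho_sites` are illustrative assumptions and are not names used by Protein Prospector.

```python
from pathlib import PurePosixPath


def site_db_subdir(site_db_dir: str, fasta_name: str, site_db_name: str) -> PurePosixPath:
    """Return the directory holding the source files for one site database.

    Layout taken from the text:
    <site_db_dir>/<FASTA name without .fasta>/<site database name>
    """
    # Strip only the .fasta suffix; the dots in the version string must survive.
    suffix = ".fasta"
    base = fasta_name[: -len(suffix)] if fasta_name.endswith(suffix) else fasta_name
    return PurePosixPath(site_db_dir) / base / site_db_name


# Hypothetical root directory and site database name.
print(site_db_subdir("/var/lib/prospector/sitedb", "SwissProt.2016.9.6.fasta", "phospho_sites"))
```

The root directory passed in should be on a local disk, in line with the warning about network filesystems.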

name: centroid_dir default value:

This is the root directory for the repository of centroided data. This directory will typically contain a subdirectory for each instrument for which you have centroided data. If you are using several computers in a cluster this parameter will typically be a directory accessible by all computers in the cluster (eg. a UNC directory on a Windows system).

Data uploaded to the server are stored in a separate repository for uploaded files, which is organized by user.

name: raw_dir default value:

This is the root directory for the repository of raw data. This directory will typically contain the same subdirectories as the repository for centroided data. If you are using several computers in a cluster this parameter will typically be a directory accessible by all computers in the cluster (eg. a UNC directory on a Windows system).

If the installation has both a UNIX and a Windows component it is possible to specify paths for both of these instances in the same file by using directives named:

name: raw_dir_unix

and

name: raw_dir_win

instead of:

name: raw_dir

name: user_repository default value:

The repository for uploaded files is used to store search results files and project files along with data files which are uploaded using the Batch-Tag Web program. Every user has a separate directory where this information is stored. The user_repository parameter is used to specify the root directory of this repository. If you are using several computers in a cluster this parameter will typically be a directory accessible by all computers in the cluster (eg. a UNC directory on a Windows system).

If the installation has both a UNIX and a Windows component it is possible to specify paths for both of these instances in the same file by using directives named:

name: user_repository_unix

and

name: user_repository_win

instead of:

name: user_repository

name: multi_process default value: false

Protein Prospector can optionally use MPICH2 to make use of multiple processors and hence speed up Batch-Tag searches. If you are using this then the multi_process parameter should be set to true.

name: msms_max_spectra default value: 500

When doing Batch-Tag searching this is the maximum number of spectra that a single process deals with in a pass through the database. If the MPI option is used then a single search will use multiple processes. Thus the number of passes through the database that are required depends on this parameter, on the number of spectra in the dataset and the number of processes that MPI has been set up to use.
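To get a rough feel for how these three quantities interact, the sketch below estimates the number of database passes. It assumes the spectra are divided evenly between the processes and that every process handles a full group in each pass; the real scheduling inside Batch-Tag may differ, so treat the result as an estimate only. The function name is hypothetical.

```python
import math


def estimated_passes(num_spectra: int, msms_max_spectra: int = 500, num_processes: int = 1) -> int:
    """Estimate database passes, assuming spectra are spread evenly over processes."""
    if num_spectra <= 0:
        return 0
    # Each pass can cover at most msms_max_spectra spectra per process.
    spectra_per_pass = msms_max_spectra * num_processes
    return math.ceil(num_spectra / spectra_per_pass)


print(estimated_passes(10000))                   # single process
print(estimated_passes(10000, num_processes=4))  # four MPI processes
```

Under these assumptions, raising msms_max_spectra or the number of processes reduces the number of passes, at the cost of more memory per process or more processors.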

name: min_xlink_spectra default value: 4

When doing a Batch-Tag crosslink search the usual number of spectra searched in a group is overridden as more hits are saved. The value stored as msms_max_spectra (typically 500) is multiplied by the ratio "Maximum Report Hits"/"# Saved Tag Hits" (both these are from the search form) to get the size of the group. If this value is less than min_xlink_spectra then a value of min_xlink_spectra is used.
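The group size calculation described above can be sketched as follows. The function and argument names are illustrative, and rounding the scaled value down to a whole number is an assumption, as the text does not say how fractions are handled.

```python
def xlink_group_size(max_reported_hits: int, saved_tag_hits: int,
                     msms_max_spectra: int = 500, min_xlink_spectra: int = 4) -> int:
    """Group size for a crosslink search: msms_max_spectra scaled by the hit ratio."""
    if saved_tag_hits <= 0:
        raise ValueError("saved_tag_hits must be positive")
    # Integer division rounds down (an assumption, see the lead-in).
    scaled = msms_max_spectra * max_reported_hits // saved_tag_hits
    # Never go below the configured minimum.
    return max(scaled, min_xlink_spectra)


# Hypothetical search form values.
print(xlink_group_size(max_reported_hits=50, saved_tag_hits=1000))
print(xlink_group_size(max_reported_hits=5, saved_tag_hits=1000))
```

In the second call the scaled value falls below min_xlink_spectra, so the minimum is used instead.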

name: duplicate_scans default value: false

When data files are uploaded using the Batch-Tag Web program they are converted to MGF format before being stored in the upload repository. Generally, when there is no precursor charge specified in the centroid file then the Precursor Charge Range option on the Batch-Tag Web form is used to supply the charges and the MGF file created doesn't contain charge information. If the duplicate_scans parameter is set to true then the MGF file that is created will contain duplicate peak lists for every charge from the Precursor Charge Range and the corresponding charge information will be placed in the MGF file. mzXML files often don't have charge information stored in the file.

name: mpi_run default value:

If MPICH2 is being used to allow parallel Batch-Tag searches on a Windows platform the mpi_run parameter needs to contain the full path to the mpiexec executable file. This parameter is only relevant if the multi_process parameter is set to true.

A typical definition could be:

mpi_run C:\Program Files\MPICH2\bin\mpiexec.exe

On LINUX installations this is dealt with by the PATH environment variable so this parameter is ignored.

name: mpi_args default value:

mpi_args contains the command line arguments used by mpiexec when using MPICH2 to run a parallel Batch-Tag search. This parameter is only relevant if the multi_process parameter is set to true.

A typical definition could be:

mpi_args -n 3 -localroot

This parameter is ignored on LINUX installations where the Perl script cgi-bin/mssearchmpi.pl is used instead.

name: min_password_length default value: 0

Users have to log in to do Batch-Tag Searching and to view the results in Search Compare. When creating a new user a password has to be selected. The min_password_length is the minimum number of characters that a password can contain. If this is set to 0 the password field can be left blank if the user doesn't want to protect their data with a password.

These are the parameters that Protein Prospector uses to log into the Batch-Tag Daemon mySQL database.

name: db_host default value: localhost

db_host is the computer on which the database resides. If you have several instances of Prospector installed on a computer cluster then this needs to be set to the computer where the database has been installed.

name: db_port default value: 0

db_port is the port used to access the database. If the default value of 0 is used then the default mySQL port is used.

name: db_name default value: ppsd

db_name is the database name. You can have more than one database but only one can be used at a time. Generally you should only change this parameter if you know what you are doing.

name: db_user default value: prospector

name: db_password default value: pp

db_user and db_password are the user name and password used to log into the database. These parameters are set when the Protein Prospector package is installed. A random password is selected at this time.

These parameters define the user name and password that Protein Prospector uses to log into the Batch-Tag Daemon mySQL database.

name: btag_daemon_name default value (Windows): btag_daemon default value (UNIX): btag-daemon

For Windows this parameter defines the name of the Batch-Tag Daemon service whereas for UNIX it defines the name of the Batch-Tag Daemon binary.

The only reason for changing this is if you have more than one instance of Protein Prospector installed on the same computer. In this case the daemons would have to have different names.

name: btag_daemon_remote default value: false

Protein Prospector will normally try to start the Batch-Tag Daemon if you submit a Batch-Tag search and it isn't running. If you set btag_daemon_remote to true then the daemon is assumed to be running on a remote computer so no attempt is made to start it. This makes it possible to set up one computer as a web server and some other computers as compute nodes. These don't even need to have the same Operating Systems running on them. Thus you could have a Windows Web Server that can deal with quantitation and LINUX compute nodes.

name: max_btag_searches default value: 1

This is the maximum number of Batch-Tag searches that can run at one time on the current computer. If more searches are submitted then they will be placed in a queue. If you want to stop the daemon for any reason but want to make sure any ongoing searches complete you can temporarily set this parameter to 0.

name: max_jobs_per_user default value: 1

This is the maximum number of Batch-Tag searches that can run at one time on the current computer by a single user. If a server has a lot of users you could set this to 1 to prevent a single user taking up all the search slots. It will also ensure that any given search by a user will finish more quickly as their individual searches won't be competing with each other.

name: email default value: false

If this parameter is set to true Protein Prospector attempts to send an email to the user once a search has either completed or has been aborted. The computer has to be set up to send email for this to work.

name: server_name default value: localhost

name: server_port default value: 80

name: virtual_dir default value:

These parameters are used to create the URL for running Search Compare when users are sent an email after a Batch-Tag search has finished.

For example for a results retrieval URL of:

http://prospector.ucsf.edu/prospector/cgi-bin/msform.cgi?form=search_compare&search_key=Md7XxQhUQ4R7HQ9i

The following parameters would need to be defined:

    server_name prospector.ucsf.edu
    virtual_dir prospector

http://prospector.ucsf.edu:8888/prospector/cgi-bin/msform.cgi?form=search_compare&search_key=Md7XxQhUQ4R7HQ9i

would require:

    server_name prospector.ucsf.edu
    server_port 8888
    virtual_dir prospector
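The relationship between the three parameters and the resulting URL can be sketched in Python as shown below. This is only an illustration that reproduces the two example URLs; the function name is hypothetical, and the behaviour for an empty virtual_dir is an assumption.

```python
def search_compare_url(server_name: str = "localhost", server_port: int = 80,
                       virtual_dir: str = "", search_key: str = "") -> str:
    """Build a Search Compare results URL from the configuration parameters."""
    # The port is left out of the URL when it is the default HTTP port.
    host = server_name if server_port == 80 else f"{server_name}:{server_port}"
    # Assumption: an empty virtual_dir means the cgi-bin directory is at the server root.
    prefix = f"/{virtual_dir}" if virtual_dir else ""
    return (f"http://{host}{prefix}/cgi-bin/msform.cgi"
            f"?form=search_compare&search_key={search_key}")


print(search_compare_url("prospector.ucsf.edu", 8888, "prospector", "Md7XxQhUQ4R7HQ9i"))
```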

name: job_status_refresh_time default value: 5

After a Batch-Tag search is submitted a Job Status page is displayed which reports on the progress of the job. By default the information is updated every 5 seconds. You can change the update rate by changing the job_status_refresh_time parameter.

name: daemon_loop_time default value: 5

The daemon_loop_time is the time the Batch-Tag Daemon sleeps between the times when it checks if it has anything to do. The default value for this parameter is 5 sec.

name: single_server default value: false

If this is set to false then searches take longer and longer to start the more searches are running. This is to ensure load balancing if there are multiple daemons running on multiple servers. If you have a single server you should set this to true, particularly if it has a lot of processors.

name: aborted_jobs_delete_days default value: 0

Information on aborted searches is kept in a database table. You can delete this information after a certain time via the aborted_jobs_delete_days parameter. If the default value of 0 is used then the information is not deleted.

name: session_delete_days default value: 0

Every time a user logs into Protein Prospector an entry is added to a table in the Batch-Tag search database. A key into the table is stored in a cookie in the user's browser which is deleted once the user closes the browser. The entries can be deleted from the database after a time controlled by the session_delete_days parameter. Once the entry has been deleted from the database then the user will have to log in again whether or not they have closed the browser. If the default value of 0 is used then the entries are never deleted from the table. A value of 2 is recommended for this parameter.

name: preload_database default value: none defined

The Batch-Tag Daemon can load sequence databases into a memory mapped file which the database search programs can access. Multiple databases can be preloaded in this way.

For example:

    preload_database SwissProt.2007.12.04
    preload_database NCBInr.11.Dec.2007

would preload the SwissProt.2007.12.04 and NCBInr.11.Dec.2007 databases into memory mapped files.

The following parameters can be modified whilst the daemon is running.

- email

- server_name

- server_port

- virtual_dir

- max_btag_searches

- daemon_loop_time

- session_delete_days

- aborted_jobs_delete_days

On Windows systems the file just needs to be saved for it to be reread. Thus you need to be careful when saving the file that there are no errors in it.

On LINUX systems, after saving the file, you also need to send a HUP signal to the btag-daemon process, i.e.:

kill -HUP pid

where pid is the process ID of the btag-daemon process.

name: join_results_files default value: true

When Batch-Tag is doing a multi-process search it stores the results for each process in a separate file. For example if the search key is YnX4ZKu8ZOd3vdvJ and there are 8 processes running the files will be called:

    YnX4ZKu8ZOd3vdvJ.xml_0
    YnX4ZKu8ZOd3vdvJ.xml_1
    YnX4ZKu8ZOd3vdvJ.xml_2
    YnX4ZKu8ZOd3vdvJ.xml_3
    YnX4ZKu8ZOd3vdvJ.xml_4
    YnX4ZKu8ZOd3vdvJ.xml_5
    YnX4ZKu8ZOd3vdvJ.xml_6
    YnX4ZKu8ZOd3vdvJ.xml_7

If join_results_files is set to true the files will be joined together at the end of a search into a single file called:

YnX4ZKu8ZOd3vdvJ.xml

If join_results_files is set to false then the files are not joined together. This could be potentially beneficial if there are a very large number of processors in which case joining the files together could be a significant proportion of the search time.
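For scripts that need to locate the results of a search, the naming scheme above can be sketched as follows. The function name is hypothetical and the code only reproduces the file names given in the example; it does not attempt to join the files.

```python
from typing import List


def expected_result_files(search_key: str, num_processes: int,
                          join_results_files: bool = True) -> List[str]:
    """List the results file names expected once a multi-process search has finished."""
    if join_results_files:
        # The per-process files are joined into a single file.
        return [f"{search_key}.xml"]
    # Otherwise one file per process remains, numbered from 0.
    return [f"{search_key}.xml_{i}" for i in range(num_processes)]


print(expected_result_files("YnX4ZKu8ZOd3vdvJ", 8, join_results_files=False))
```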

name: expectation_search_first default value: false

When a Batch-Tag search is performed a search is sometimes done against a random database to determine coefficients for expectation value calculations. This can either be done before the normal search or after it. If it is done after the normal search then it is possible to quickly estimate the length of a search which could then be automatically aborted if it was going to take too long. Doing the expectation value search first has been left as an option so that in the future a facility for viewing the results of partially completed searches can be added.

name: raw_data_forwarding default value: false

Any Protein Prospector programs that access raw data files, either to display the raw data or to do quantitation, need to run on a computer running Windows. If the parameter raw_data_forwarding is set to true then in such cases the name of the server binary will have RawData appended to it (eg. searchCompareRawData.cgi) to allow the request to be forwarded to a Windows server. Note that the Protein Prospector instance running on the Windows server will also need to have the raw_data_forwarding parameter set.

Some directives will also be required in the Apache setup file. In the example shown below server2 is the server with the Windows version of Protein Prospector. Note that all requests to the msdisplay binary need to be forwarded to the Windows server.

RewriteCond %{REQUEST_URI} ^.*RawData.cgi

RewriteRule ^/(.*)RawData.cgi http://server2/$1.cgi [P]

RewriteCond %{REQUEST_URI} ^.*msdisplay.cgi

RewriteRule ^/(.*)/msdisplay.cgi http://server2/$1/msdisplay.cgi [P]

Also see the virtual_dir_proxy parameter below.

If the server has to deal with a lot of quantitation traffic it is possible to have multiple quantitation servers. The Apache file directives and a Perl script to set this up are shown below. The Perl script gets started up when the Apache server starts. It then serves requests to the quantitation servers in turn.

Apache setup file directives:

RewriteEngine on

RewriteLog "/var/log/apache2/rewrite.log"

RewriteLogLevel 5

# Force TRACE requests to return errors

# Needed for port 80 reconnects at UCSF

RewriteCond %{REQUEST_METHOD} ^TRACE

RewriteRule .* - [F]

RewriteMap lb prg:/var/lib/prospector/bin/load_balance.pl

RewriteCond %{REQUEST_URI} ^.*RawData.cgi [OR]

RewriteCond %{REQUEST_URI} ^/prospector\d [OR]

RewriteCond %{REQUEST_URI} ^.*msdisplay.cgi

RewriteRule ^(.+)$ ${lb:$1} [P,L]

Perl script:

    use strict;
    # Don't buffer output.
    $| = 1;
    # The pool of possible servers for the round-robin.
    my @servers = ( "munch01", "munch02" );
    my $domain = "ucsf.edu";
    my $server = "";
    my $range = scalar @servers;
    my $count = 0;
    my $uri = "";
    # Apache writes one request URI per line to the script's standard input.
    while ( $uri = <STDIN> ) {
        $count = ( ($count + 1) % $range );
        # Assign the server by round-robin
        $server = $servers[$count] . ".ucsf.edu";
        # Additional rewrites (instead of doing them in Apache).
        $uri =~ s/RawData.cgi/.cgi/;
        if ( $uri =~ /^\/prospector(\d)/ ) {
            $server = "munch0$1";
            $uri =~ s/^\/prospector\d/\/prospector/;
        }
        print "http://$server/$uri";
    }

name: virtual_dir_proxy default value:

This is required by systems where there is a proxy server and 1 or more servers behind it running instances of Protein Prospector. Some parts of Prospector require a full address to be written into the output for this to work. For example something like:

<img src="/prospector/temp/Jul_27_2009/imageskk.11.png" />

is normally written into the output to display an image from the R package, such as the error scatterplot from MS-Product.

If we set virtual_dir_proxy to prospector1 then this server will write:

<img src="/prospector1/temp/Jul_27_2009/imageskk.11.png" />

instead.

A rewrite rule in the Apache setup file can then be used to change this into a full address so that the proxy server will find the file.

RewriteCond %{REQUEST_URI} ^/prospector1/.*

RewriteRule ^/prospector1/(.*) http://prospector1.ucsf.edu/prospector/$1 [P]

After v6.0.0 it became possible to interact with a raw data extraction daemon on a Windows server rather than forwarding reports requiring raw data to a server with Prospector installed. The Windows server can be set up to queue extraction requests and Search Compare does not need to be running whilst the extraction is taking place. Once the extraction request has completed the report is accessible from a Search Table link.

name: raw_data_batch_option default value:

This controls the processing of raw data. If this is undefined then the raw data processing is built into Search Compare, MS-Display and MS-Product. Setting the flag to "optional" means that there is an optional menu for turning this on and off on Search Compare. MS-Display and MS-Product have inbuilt raw data processing. Setting the flag to "on" indicates that all raw data processing is handled by a daemon.

name: quan_remain_time_measurement_point default value: 30

quan_remain_time_measurement_point is used in conjunction with the quan_remain_time_no_abort_limit defined below. It is the time elapsed before the remaining quantitation extraction time is measured. This is to allow a more accurate estimation of the extraction time.

name: quan_remain_time_no_abort_limit default value: 0

If the remaining quantitation extraction time is greater than quan_remain_time_no_abort_limit then Search Compare is automatically aborted and the report is replaced by the Search Table. The Search Compare report is accessible from the Search Table once the raw data extraction has taken place.

If quan_remain_time_no_abort_limit is set to 0 then Search Compare is not automatically aborted.

name: quan_wait_time_no_abort_limit default value: 0

If the quantitation extraction is queued (before extraction) for longer than quan_wait_time_no_abort_limit then Search Compare is automatically aborted and the report is replaced by the Search Table. The Search Compare report is accessible from the Search Table once the raw data extraction has taken place.

If quan_wait_time_no_abort_limit is set to 0 then Search Compare is not automatically aborted.
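The two abort rules share the same shape, which the following sketch makes explicit. The function name is hypothetical, and the times are assumed to be in the same unit as the configured limits (the text does not state the unit).

```python
def should_abort(measured_time: float, no_abort_limit: float) -> bool:
    """Decide whether Search Compare should be aborted in favour of the Search Table.

    measured_time is either the estimated remaining extraction time
    (for quan_remain_time_no_abort_limit) or the time spent queued
    (for quan_wait_time_no_abort_limit).
    """
    # A limit of 0 disables automatic aborting.
    if no_abort_limit == 0:
        return False
    return measured_time > no_abort_limit


print(should_abort(120, 60))  # over the limit
print(should_abort(120, 0))   # limit disabled
```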

The file html/js/info.js controls some aspects of what is displayed on static web pages such as the home page mshome.htm. There are some variables near the top of the file that can be modified.

pubWebServer

If pubWebServer is set to false then the links to FA-Index on static web pages are not shown.

batchMSMSSearching

If batchMSMSSearching is set to false then all the links in the Batch MSMS Searching section of the home page are not shown.

sciexAnalystRawData

If sciexAnalystRawData is set to false then the link to Wiff Read on the home page is not shown.

ABITOFTOFRawData

If ABITOFTOFRawData is set to false then the link to Peak Spotter on the home page is not shown.

ucsfBanner

If ucsfBanner is set to false then the black UCSF area of the web page is not shown on static web pages.

feedbackEmail

The feedbackEmail variable is used to control the email address that users are prompted to send queries to.

See also: Clearing the Cache When Updating Javascript Files

The database accession number in the search results has an HTML link to retrieve the complete entry, including comments, from a remote database. In order for this link to be created the programs need to know the URL for the remote database. This is accomplished through parameters contained in the acclinks.txt file. Occasionally the URLs for the remote databases may need to be updated, or new ones added for a new database. This requires editing the acclinks.txt file.

Within the acclinks.txt file an entry for an HTML link from the accession number MUST contain 1 line:

The line must contain the following information:

- The prefix name for the database as listed in the HTML input page for each program. The prefix should be long enough to uniquely identify the database or set of databases you wish to refer to.

- The URL to link to if the accession number for the entry is added to the end of the URL. The URL addition is internal to the programs and is expected to retrieve a fully annotated entry from a remote database.

Note that this link need not be to a sequence database. The link could be to whatever a Protein Prospector server administrator specifies.

Example:

Below is an example of the entry for UniProtKB in acclinks.txt:

UniProt http://www.pir.uniprot.org/cgi-bin/upEntry?id=

The lowercase prefixes gen, owl, swp, or nr are intended to be used for a second database that is of the same format as the uppercase one. See Linking for creating links into NCBI databases.

As mentioned above the prefix name can refer to a single database or a set of databases. For example if you have two user created databases called PA3_mouse and PA33_mouse, an entry in the acclinks.txt file of the form:

PA3 some_url_prefix

would give the databases the same accession number link. On the other hand entries of the following form:

    PA3 some_url_prefix
    PA33 another_url_prefix

would give the databases different accession number links.
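The prefix matching can be illustrated with a short Python sketch. It assumes that when several prefixes match a database name the longest one wins, which is one way to get the behaviour described for PA3 and PA33; the actual matching rule used by the programs is not stated here. The function names, the example URLs and the accession number are hypothetical.

```python
from typing import Dict, Optional


def parse_acclinks(text: str) -> Dict[str, str]:
    """Read 'prefix URL' lines into a dictionary."""
    links = {}
    for line in text.splitlines():
        parts = line.split(None, 1)
        if len(parts) == 2:
            links[parts[0]] = parts[1].strip()
    return links


def accession_url(links: Dict[str, str], database: str, accession: str) -> Optional[str]:
    """Return the link for an accession number, or None if no prefix matches."""
    matches = [prefix for prefix in links if database.startswith(prefix)]
    if not matches:
        return None
    # Assumption: the longest matching prefix takes priority.
    best = max(matches, key=len)
    # The accession number is appended to the end of the URL.
    return links[best] + accession


links = parse_acclinks("PA3 http://example.org/a?id=\nPA33 http://example.org/b?id=\n")
print(accession_url(links, "PA3_mouse", "P12345"))
print(accession_url(links, "PA33_mouse", "P12345"))
```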

Protein Prospector server administrators who find improved options for links to publicly available databases are encouraged to send the modified parameter files to the Protein Prospector developers for inclusion in subsequent Protein Prospector releases.

The upidlinks.txt file contains the remote database URL definitions for the links from gene names in the Protein Prospector results pages. Currently gene names are only reported in the Search Compare output.

The instructions for modifying this file are essentially the same as those for modifying the acclinks.txt file.

Some examples are given below:

    SwissProt http://www.pir.uniprot.org/cgi-bin/upEntry?id=
    swp http://www.pir.uniprot.org/cgi-bin/upEntry?id=
    UniProt http://www.pir.uniprot.org/cgi-bin/upEntry?id=

The MS-Digest index number in the search results has an HTML link to retrieve an MS-Digest listing for the matched database entry. In order for this link to be created the programs need to know the URL to MS-Digest and some default parameters. This is accomplished through information contained in the idxlinks.txt file. A server administrator can customize these parameters by editing the idxlinks.txt file.

Within the idxlinks.txt file an entry for an HTML link from the MS-Digest index number MUST contain 2 lines:

The lines must contain the following information:

- The program name for which the specified HTML link will be created from the index number link in the program's output.

- The URL to link to if the enzyme, MS-Digest index number, and modified AA parameters (from MS-Fit only) for the entry are added to the end of the provided URL. The URL addition is internal to the programs and is expected to provide an MS-Digest listing for the database entry corresponding to the index number.

Note that this link need not be the same for each Protein Prospector program creating the link, and that the MS-Digest parameters can be customized. Furthermore, this link need not be to MS-Digest at all; the link could be to whatever a Protein Prospector server administrator specifies.

Example:

Below is an example of the entries for msfit and mstag in idxlinks.txt:

    msfit
    MSDIGEST?
    mstag
    MSDIGEST?mod_AA=Peptide+N-terminal+Gln+to+pyroGlu&mod_AA=Oxidation+of+M&mod_AA=Protein+N-terminus+Acetylated

The items on the taxonomy menu are controlled by the files taxonomy.txt and taxonomy_groups.txt. You can edit these files to add to or modify the available options.

If the taxonomy you want to add is contained in either taxonomy/names.dmp or taxonomy/speclist.txt then you can add it to the taxonomy.txt file. It is recommended that you use capital letters.

The taxonomy_groups.txt file can deal with more complex definitions. Within this file a single taxonomy entry must contain at least ONE line and individual entries are separated by a line with only the ">" symbol.

The first line of an entry contains the taxonomy name as it is to appear on the Taxonomy menu. All other lines should contain taxonomies that are available in either taxonomy/names.dmp or taxonomy/speclist.txt.

Some examples are listed below. Most of these were introduced to give backwards compatibility with previous versions of Protein Prospector.

Grouping two or more species.

    HUMAN MOUSE
    HOMO SAPIENS
    MUS MUSCULUS
    >

Groups of two or more taxonomies.

    ROACH LOCUST BEETLE
    ROACHES
    GRASSHOPPERS AND LOCUSTS
    BEETLES
    >

Defining your own name for something that is a valid taxonomy option. In this case RODENTS is valid but RODENT isn't.

    RODENT
    RODENTS
    >

Other examples:

    MICROORGANISMS
    'FLAVOBACTERIUM' LUTESCENS
    [BREVIBACTERIUM] FLAVUM
    [POLYANGIUM] BRACHYSPORUM
    ABIOTROPHIA DEFECTIVA
    ACARYOCHLORIS MARINA
    ACARYOCHLORIS MARINA MBIC11017
    ACETIVIBRIO CELLULOLYTICUS
    ACETIVIBRIO ETHANOLGIGNENS
    ACETOBACTER ACETI
    ACETOBACTER ESTUNENSIS
    .....
    .....
    ZYMOMONAS MOBILIS
    ZYMOMONAS MOBILIS SUBSP. FRANCENSIS
    ZYMOMONAS MOBILIS SUBSP. MOBILIS
    ZYMOMONAS MOBILIS SUBSP. MOBILIS ATCC 10988
    ZYMOMONAS MOBILIS SUBSP. MOBILIS ZM4
    ZYMOMONAS MOBILIS SUBSP. POMACEAE
    >
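To check a taxonomy_groups.txt file after editing it, a small reader along the following lines may be useful. It follows the format described above: the first line of an entry is the menu name, the remaining lines are the member taxonomies, and a line containing only ">" ends the entry. The function name is hypothetical and the sketch does not validate the members against taxonomy/names.dmp or taxonomy/speclist.txt.

```python
from typing import Dict, List


def parse_taxonomy_groups(text: str) -> Dict[str, List[str]]:
    """Map each menu name to the list of taxonomies it groups together."""
    groups: Dict[str, List[str]] = {}
    entry: List[str] = []
    for raw in text.splitlines():
        line = raw.strip()
        if line == ">":
            # End of an entry: first line is the name, the rest are members.
            if entry:
                groups[entry[0]] = entry[1:]
            entry = []
        elif line:
            entry.append(line)
    if entry:  # tolerate a missing final separator
        groups[entry[0]] = entry[1:]
    return groups


sample = "HUMAN MOUSE\nHOMO SAPIENS\nMUS MUSCULUS\n>\nRODENT\nRODENTS\n>\n"
for name, members in parse_taxonomy_groups(sample).items():
    print(name, "->", members)
```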

Detailed information on all amino acids used in the programs is located on the server in the file aa.txt.

You can edit this file to change the attributes shown below. This is not recommended unless you know what you are doing.

An entry for an amino acid MUST contain 9 lines:

line 1) contains a name for the amino acid. This isn't currently used by the programs.

line 2) contains a single letter code for the amino acid.

line 3) contains the elemental formula of the amino acid.

lines 4) and 5) contain elemental formulae for side-chains that are used in calculating d and w ions. If there are no beta substituents, or they are irrelevant, then use 0 (zero) on these lines.

line 6) contains the pk_C_term for the amino acid.

line 7) contains the pk_N_term for the amino acid.

line 8) contains the pk_acidic_sc for the amino acid. You should enter n/a for not applicable.

line 9) contains the pk_basic_sc for the amino acid. You should enter n/a for not applicable.

The pK values are taken from:

Bjellqvist, B., Hughes, G. J., Pasquali, C., Paquet, N., Ravier, F., Sanchez, J.-C., Frutiger, S., Hochstrasser, D. (1993) The focusing positions of polypeptides in immobilized pH gradients can be predicted from their amino acid sequences, Electrophoresis, Vol. 14, Pp. 1023-1031

Bjellqvist, B., Basse, B., Olsen, E. and Celis, J. E. (1994) Reference points for comparisons of two-dimensional maps of proteins from different human cell types defined in a pH scale where isoelectric points correlate with polypeptide compositions, Electrophoresis, Vol. 15, Pp. 529-539

Below is an example of the entry for Isoleucine:

    Isoleucine
    I
    C6 H11 N1 O1
    C1 H3
    C2 H5
    3.55
    7.5
    n/a
    n/a
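The nine-line layout can be made concrete with a small Python reader for a single entry. The class, field and function names are illustrative choices, and the sketch simply keeps the formulae as text and turns n/a into None.

```python
from typing import List, NamedTuple, Optional


class AminoAcid(NamedTuple):
    name: str
    code: str
    formula: str
    side_chain_1: str
    side_chain_2: str
    pk_c_term: float
    pk_n_term: float
    pk_acidic_sc: Optional[float]
    pk_basic_sc: Optional[float]


def _pk(value: str) -> Optional[float]:
    """Convert a side-chain pK field, treating n/a as 'not applicable'."""
    return None if value.lower() == "n/a" else float(value)


def parse_amino_acid(lines: List[str]) -> AminoAcid:
    """Parse the nine lines of one amino acid entry."""
    if len(lines) != 9:
        raise ValueError("an amino acid entry must contain 9 lines")
    f = [line.strip() for line in lines]
    return AminoAcid(f[0], f[1], f[2], f[3], f[4],
                     float(f[5]), float(f[6]), _pk(f[7]), _pk(f[8]))


entry = ["Isoleucine", "I", "C6 H11 N1 O1", "C1 H3", "C2 H5", "3.55", "7.5", "n/a", "n/a"]
print(parse_amino_acid(entry))
```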

Make sure the elements in your amino acid are present in the file elements.txt. See also, To Add/Change Elements.

It is not possible to add new amino acids. The ones currently defined are:

Alanine (A), Cysteine (C), Aspartic Acid (D), Glutamic Acid (E), Phenylalanine (F), Glycine (G), Histidine (H), Isoleucine (I), Lysine (K), Leucine (L), Methionine (M), Asparagine (N), Proline (P), Glutamine (Q), Arginine (R), Serine (S), Threonine (T), Valine (V), Tryptophan (W), Tyrosine (Y), Homoserine Lactone (h), Met Sulfoxide (m), Phosphorylated Serine (s), Phosphorylated Threonine (t), Phosphorylated Tyrosine (y), Selenocysteine (U)

The files usermod_frequent.txt, usermod_glyco.txt, usermod_msproduct.txt, usermod_quant.txt, usermod_silac.txt and usermod_xlink.txt contain the variable modifications used on the search forms. An administrator can add new modifications to these files or edit existing ones.

The file usermod_msproduct.txt is used to store modifications for use by MS-Product but not by MS-Tag, Batch-Tag, MS-Bridge, MS-Digest and MS-Fit. One major use of this file is to add new modifications for the MS-Viewer program. As these are often from other search engines they may not be appropriate for MS-Tag and Batch-Tag.

The file usermod_glyco.txt is used to store glycopeptide related modifications for use by MS-Product, MS-Viewer, MS-Tag, Batch-Tag, MS-Bridge, MS-Digest and MS-Fit.

The file usermod_silac.txt is used to store SILAC quantitation modifications for use by MS-Product, MS-Viewer, MS-Tag, Batch-Tag, MS-Bridge, MS-Digest and MS-Fit.

The file usermod_quant.txt is used to store non-SILAC quantitation modifications for use by MS-Product, MS-Viewer, MS-Tag, Batch-Tag, MS-Bridge, MS-Digest and MS-Fit.

The file usermod_xlink.txt is used to store crosslinking related modifications for use by MS-Product, MS-Viewer, MS-Tag, Batch-Tag, MS-Bridge, MS-Digest and MS-Fit. These modifications are referenced by the file links.txt.

Note that as of release 5.14.0 the file usermod.txt is no longer required. If you have rearranged all the modifications into the above categories the file must be removed. If it is present then the modifications contained in it will still be added to the menus but will be placed in an Unknown category. Modifications in the file should not be present in any of the other files.

For the file usermod_glyco.txt an entry for a variable modification MUST contain a single line. This contains a name for the modification followed by a bracketed list of amino acids to check for the modification. For modifications in this file the name must be made up from the following elements: "HexNAc", "NeuAcAc", "NeuGcAc", "NeuAc", "NeuGc", "Phospho", "Sulfo", "Galactosyl", "Glucosylgalactosyl", "+Formyl", "+Formyl", "Fuc", "Ac", "Hex", "Xyl" optionally followed by integer numbers. The elemental formulae corresponding to these elements are in the source code. "+Cation:K" and "+Cation:Na" mods are automatically generated for glycosylation modifications.
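The naming rule above can be checked mechanically. The following Python sketch splits a candidate name into the listed building blocks, trying longer elements first so that "HexNAc" is not read as "Hex" plus leftover text. It assumes the elements are concatenated without separators and that a missing integer means a count of one; neither assumption is confirmed by the source, and `split_glyco_name` is a hypothetical helper, not part of Protein Prospector.

```python
import re

# Building blocks copied from the text above. Longer names are tried first
# so that "HexNAc" wins over "Hex" and "NeuAcAc" wins over "NeuAc".
GLYCO_ELEMENTS = [
    "HexNAc", "NeuAcAc", "NeuGcAc", "NeuAc", "NeuGc", "Phospho", "Sulfo",
    "Galactosyl", "Glucosylgalactosyl", "+Formyl", "Fuc", "Ac", "Hex", "Xyl",
]
_ALTERNATION = "|".join(
    re.escape(e) for e in sorted(GLYCO_ELEMENTS, key=len, reverse=True)
)
_TOKEN = re.compile(rf"({_ALTERNATION})(\d*)")


def split_glyco_name(name):
    """Return [(element, count), ...], or None if the name does not parse.

    Assumption: an element without a trailing integer counts once.
    """
    parts, pos = [], 0
    while pos < len(name):
        match = _TOKEN.match(name, pos)
        if match is None:
            return None
        parts.append((match.group(1), int(match.group(2) or 1)))
        pos = match.end()
    return parts or None
```

For instance, `split_glyco_name("HexNAc2Hex5")` yields `[("HexNAc", 2), ("Hex", 5)]`, while a name containing an unlisted element returns `None`.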

For the rest of the Usermod files an entry for a variable modification MUST contain 3 lines:

line 1) contains a name for the modification;

line 2) contains an elemental formula for the modification (elements can be negative, e.g. Amidation would be N H O-1);

line 3) contains a list of amino acids/termini to check for the modification.

Although the software doesn't require it, we suggest keeping the modifications in the same order as in the supplied file, where the modification names are in alphabetical order.

It is strongly recommended that you use names which follow the PSI_MOD standard for naming modifications. Also you should check the Unimod website to see if the modification you want to add already has a name. If you add a modification and either change the name or the elemental formula then all previous search results using this modification will be invalid and should be deleted.

Some examples of what line 3) can contain are:

1). Restricting the modification to the protein N or C terminus:

Protein N-term Protein C-term

2). Restricting the modification to one of a list of amino acids at the protein N or C terminus:

Protein N-term M

3). Modification to the peptide N or C terminus:

C-term N-term

4). Modification to one of a list of amino acids at the peptide N or C terminus:

N-term Q C-term M

5). Neutral loss modification:

Neutral loss

6). Modification to one of a list of amino acids:

STY

Below is an example of the entry for Phosphorylation of S, T and Y:

Phospho

P O3 H

STY
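As a rough illustration of the three-line layout, the Python sketch below reads a block of entries and converts each elemental formula into a dictionary of element counts. It assumes blank lines can be ignored and that the text holds nothing but three-line entries; the function names are hypothetical and this is not the parser Protein Prospector itself uses. Line 3 is kept as raw text because its site syntax has several variants.

```python
import re

# One formula term: an optional isotope prefix (e.g. "13C"), an element
# symbol, and an optional signed count (e.g. "O-1", "O3").
_TERM = re.compile(r"(\d*[A-Z][a-z]?)(-?\d+)?$")


def parse_formula(text):
    """Turn 'N H O-1' into {'N': 1, 'H': 1, 'O': -1}."""
    counts = {}
    for term in text.split():
        match = _TERM.match(term)
        if match is None:
            raise ValueError(f"bad formula term: {term!r}")
        symbol, number = match.groups()
        counts[symbol] = counts.get(symbol, 0) + int(number or 1)
    return counts


def parse_usermod(text):
    """Group non-blank lines in threes: name, formula, sites."""
    lines = [ln.strip() for ln in text.splitlines() if ln.strip()]
    if len(lines) % 3 != 0:
        raise ValueError("expected 3 lines per modification")
    mods = []
    for i in range(0, len(lines), 3):
        name, formula, sites = lines[i:i + 3]
        mods.append(
            {"name": name, "formula": parse_formula(formula), "sites": sites}
        )
    return mods


example = """Phospho
P O3 H
STY
"""
print(parse_usermod(example))
```

A check like this can catch an entry that has lost a line before the file is installed on the server.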

The list of possible constant modifications is generated automatically from the list of possible variable modifications. Note that as of release 5.14.0 glycosylation and cross-linking modifications are not added to the list of possible constant modifications.

The list of N-terminus modifications that can form a1 and b1 ions is stored in the b1.txt file. The N-terminus modifications in this file must have definitions in either usermod.txt, usermod_glyco.txt or usermod_xlink.txt.

Some example entries are listed below:

Acetyl

iTRAQ4plex

iTRAQ8plex

Detailed information on all elements used in the programs is located on the server in the elements.txt file. You must edit this file to add or modify an element.

Within the elements.txt file an entry for an element MUST contain 1 line:

The line contains the following information:

a). The symbol for the element.

b). The valency of the element.

c). The number of isotopes listed on the line.

d). A mass/abundance pair for each isotope.

Below is an example of the entry for hydrogen:

H 1 2 1.007825035 .99985 2.014101779 0.00015

If you add a new element, please send the modified parameter file to the Protein Prospector maintainers for inclusion in subsequent Protein Prospector releases.

Stable Isotope elements may also be added. For example:

2H 1 1 2.014101778 1.0

13C 4 1 13.003354838 1.0

15N 3 1 15.000108898 1.0

18O 2 1 17.999160419 1.0
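To make the one-line element layout concrete, here is a small Python sketch that parses an entry and derives two masses from it. The function names are hypothetical, the fields are assumed to be separated by whitespace, and the mass calculations are ordinary arithmetic rather than a description of how Protein Prospector computes masses internally.

```python
def parse_element_line(line):
    """Parse 'symbol valency n_isotopes mass abundance [mass abundance ...]'."""
    fields = line.split()
    symbol, valency, n_isotopes = fields[0], int(fields[1]), int(fields[2])
    values = [float(v) for v in fields[3:]]
    if len(values) != 2 * n_isotopes:
        raise ValueError(f"{symbol}: expected {n_isotopes} mass/abundance pairs")
    # Pair up alternating mass and abundance values.
    isotopes = list(zip(values[0::2], values[1::2]))
    return {"symbol": symbol, "valency": valency, "isotopes": isotopes}


def most_abundant_mass(element):
    """Mass of the isotope with the highest abundance."""
    return max(element["isotopes"], key=lambda pair: pair[1])[0]


def average_mass(element):
    """Abundance-weighted mean of the isotope masses."""
    total = sum(abundance for _, abundance in element["isotopes"])
    return sum(mass * abundance for mass, abundance in element["isotopes"]) / total


hydrogen = parse_element_line("H 1 2 1.007825035 .99985 2.014101779 0.00015")
print(most_abundant_mass(hydrogen), average_mass(hydrogen))
```

The pair-count check is the useful part for an administrator: it flags a line whose isotope count no longer matches the number of mass/abundance pairs after an edit.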

The masses and isotopic abundances currently used are from:

Audi, G. and Wapstra, A. H. (1995) The 1995 update to the atomic mass evaluation. Nucl. Phys. A, Vol. 595, pp. 409-480.

Detailed information on all enzymatic digests used in the programs is located on the server in the enzyme.txt file. You must edit this file to add or modify the rules for an enzymatic digest.

Within this file an entry for an enzymatic digest MUST contain 4 lines:

line 1) contains a name for the enzymatic digest which will appear on the digest menu;

line 2) contains a list of cleavage amino acids;

line 3) contains a list of exception amino acids (a '-' character indicates no exceptions);

line 4) either C for cleavage on the C terminus side of an amino acid or N for cleavage on the N terminus side.

Below is an example of the entry for Trypsin:

Trypsin

KR

P

C
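The four-line entry can be read as a simple cleavage rule. The Python sketch below parses such entries and applies one to a sequence with no missed cleavages. It rests on an assumption the text does not spell out: that an exception residue blocks cleavage when it is the neighbour on the far side of the cut (the following residue for C-side enzymes, and, as a guess, the preceding residue for N-side enzymes). The function names and the test sequence are hypothetical.

```python
def parse_enzymes(text):
    """Group non-blank lines in fours: name, cleavage AAs, exceptions, side."""
    lines = [ln.strip() for ln in text.splitlines() if ln.strip()]
    if len(lines) % 4 != 0:
        raise ValueError("expected 4 lines per enzyme")
    enzymes = {}
    for i in range(0, len(lines), 4):
        name, cleave, exceptions, side = lines[i:i + 4]
        if side not in ("C", "N"):
            raise ValueError(f"{name}: side must be C or N")
        enzymes[name] = {
            "cleave": set(cleave),
            # A '-' on line 3 means there are no exceptions.
            "except": set() if exceptions == "-" else set(exceptions),
            "side": side,
        }
    return enzymes


def digest(sequence, enzyme):
    """Split a sequence at every permitted site (no missed cleavages)."""
    cut_points = []
    for i, residue in enumerate(sequence):
        if residue not in enzyme["cleave"]:
            continue
        if enzyme["side"] == "C":
            cut = i + 1
            neighbour = sequence[i + 1] if i + 1 < len(sequence) else ""
        else:
            cut = i
            neighbour = sequence[i - 1] if i > 0 else ""
        # Assumed meaning of "exception": the neighbour blocks the cut.
        if neighbour in enzyme["except"]:
            continue
        if 0 < cut < len(sequence):
            cut_points.append(cut)
    bounds = [0] + cut_points + [len(sequence)]
    return [sequence[a:b] for a, b in zip(bounds, bounds[1:])]


trypsin = parse_enzymes("Trypsin\nKR\nP\nC\n")["Trypsin"]
print(digest("AKRPGKC", trypsin))
```

With the Trypsin entry, the made-up sequence `AKRPGKC` is cut after the first K and after the last K, but not after R because the next residue is P.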

The file enzyme_comb.txt is used to specify enzyme combinations. You can combine the cleavage rules for two or more enzymes by having them on the same line in this file separated by a '/' character. For example to have an option which combines the cleavage rules for CNBr and Trypsin you would need the following line:

Trypsin/CNBr

The enzyme combinations will appear on the digest menu after the enzymes that have been defined in the enzyme.txt file.

Any enzyme used in the enzyme_comb.txt must have been defined in the enzyme.txt file.

It is possible to mix enzymes which cleave on the N-terminus side with those that cleave on the C-terminus side.
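Because every enzyme named in enzyme_comb.txt must already be defined in enzyme.txt, a consistency check before restarting the server can save some trouble. The Python sketch below is one way to do it; `parse_enzyme_combinations` is a hypothetical helper, and it assumes one combination per line and that enzyme names themselves never contain a '/' character.

```python
def parse_enzyme_combinations(comb_text, defined_enzymes):
    """Return a list of enzyme-name lists, one per combination line.

    Raises ValueError if a combination uses an enzyme that is not in
    defined_enzymes (the names taken from enzyme.txt).
    """
    combinations = []
    for raw in comb_text.splitlines():
        line = raw.strip()
        if not line:
            continue
        names = line.split("/")
        missing = [name for name in names if name not in defined_enzymes]
        if missing:
            raise ValueError(f"{line}: undefined enzyme(s) {missing}")
        combinations.append(names)
    return combinations


print(parse_enzyme_combinations("Trypsin/CNBr\n", {"Trypsin", "CNBr"}))
```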

If you add a new enzymatic digest, please send the modified parameter file to the Protein Prospector maintainers for inclusion in subsequent Protein Prospector releases.

The imm.txt file contains the immonium ion elemental formulae and corresponding compositional information for use by Protein Prospector programs.

The first 2 entries in the file are for the immonium tolerance and the minimum fragment ion mass (both in Da). This is followed by a list of immonium ions.

An entry for an immonium ion contains:

1). The elemental formula using elements defined in elements.txt.

2). The compositional information. List all the amino acids corresponding to the elemental formula.

3). Ions labelled as M are major peaks; these are used to include an amino acid when using immonium ions to extract compositional ions in MS-Tag and MS-Seq. Minor ions are labelled m and are only likely to be present alongside major ions. They are reported in the immonium and related ions section of the MS-Product report.

4). Use I if the ion is an immonium ion or - otherwise.

5). A list of amino acids to exclude if the mass is missing or a dash (-) character if there are no amino acids to exclude. Excluding amino acids on the basis of missing peaks is a feature that can be turned off.

The fields must be separated by the | character.

For example:

C2 H6 N O|S|M|I|-

C4 H8 N|P|M|I|P

C4 H8 N|R|M|-|-

C4 H10 N|V|M|I|-

C3 H8 N O|T|M|I|-

C5 H10 N|KQ|M|-|-

C5 H12 N|IL|M|I|IL

C3 H7 N2 O|N|M|I|-

C4 H11 N2|R|M|-|-

C3 H6 N O2|D|M|I|-

C4 H10 N3|R|m|-|-

C5 H13 N2|K|M|I|-

C4 H9 N2 O|Q|M|I|-

C4 H8 N O2|E|M|I|-

C4 H10 N S|M|M|I|-

C5 H8 N3|H|M|I|H

C5 H10 N3|R|M|-|R

C8 H10 N|F|M|I|-

C6 H8 N O2|P|M|-|-

C6 H13 N2 O|K|m|-|-

C5 H9 N2 O2|Q|m|-|-

C8 H10 N O|Y|M|I|-

C6 H8 N3 O|H|m|-|-

C10 H11 N2|W|M|I|-
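The five |-separated fields map naturally onto a small record type. The Python sketch below parses one immonium ion line and validates the two flag fields; the `ImmoniumIon` type and its field names are hypothetical labels chosen for this illustration, and the two leading entries of the file (tolerance and minimum fragment ion mass) are not handled here.

```python
from typing import NamedTuple


class ImmoniumIon(NamedTuple):
    formula: str             # field 1: elemental formula
    amino_acids: str         # field 2: compositional information
    major: bool              # field 3: True for 'M', False for 'm'
    is_immonium: bool        # field 4: True for 'I', False for '-'
    exclude_if_missing: str  # field 5: '' when the file has '-'


def parse_immonium_line(line):
    """Parse one 'formula|AAs|M or m|I or -|exclusions or -' line."""
    fields = [field.strip() for field in line.split("|")]
    if len(fields) != 5:
        raise ValueError(f"expected 5 fields, got {len(fields)}: {line!r}")
    formula, amino_acids, strength, kind, exclude = fields
    if strength not in ("M", "m"):
        raise ValueError(f"field 3 must be M or m: {line!r}")
    if kind not in ("I", "-"):
        raise ValueError(f"field 4 must be I or -: {line!r}")
    return ImmoniumIon(
        formula,
        amino_acids,
        strength == "M",
        kind == "I",
        "" if exclude == "-" else exclude,
    )


print(parse_immonium_line("C5 H12 N|IL|M|I|IL"))
```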

Any suggestions for improving this scheme should be sent to the Protein Prospector maintainers for inclusion in subsequent Protein Prospector releases.

Edit the fit_graph.par.txt file.

Edit the pr_graph.par.txt file.

Edit the sp_graph.par.txt file.

Edit the dbstat_hist.par.txt file.

Edit the hist.par.txt file.

Edit the error_hist.par.txt file.

Edit the mmod_hist.par.txt file.

Edit the cr_graph.par.txt file.

Many of the graphs in the package are HTML5/Javascript plots which use the information in their corresponding parameter file to control their appearance. Note that from release 5.16.0 onwards Java applets are no longer used to render graphical output.

The files contain comment lines (starting with a # character) explaining the parameter fields beneath them. The parameters are name-value pairs: each is a line in which the name is followed by a space character and the rest of the line is the value.

Colors are specified as 3 integers for the red, green and blue intensities respectively. The intensity values must be between 0 and 255.

A font specification is made up of a font family (Georgia, Palatino Linotype, Book Antiqua, Times New Roman, Arial, Helvetica, Arial Black, Impact, Lucida Sans Unicode, Tahoma, Verdana, Courier New or Lucida Console), a font style identifier (PLAIN, BOLD or ITALIC) and a point size.

In the list below, each description is followed by the names of the parameters it covers:

- The graph width in pixels.
  - applet_width

- The graph height in pixels.
  - applet_height

- The width of the graph axes and the lines used to draw the graph in pixels.
  - line_width

- The graph background color.
  - applet_background_color_red
  - applet_background_color_green
  - applet_background_color_blue

- The graph axes color.
  - axes_color_red
  - axes_color_green
  - axes_color_blue

- The default peak color.
  - default_peak_color_red
  - default_peak_color_green
  - default_peak_color_blue

- The number of application colors. This should be set to zero for MS-Isotope.
  - number_application_colors

- The application colors.
  - application_color_1_red
  - application_color_1_green
  - application_color_1_blue
  - application_color_2_red
  - application_color_2_green
  - application_color_2_blue
  - etc.

- The default font, used for all text except the peak labels.
  - default_font_family
  - default_font_style
  - default_font_points

- The peak label font.
  - peak_label_font_family
  - peak_label_font_style
  - peak_label_font_points

- The X-Axis label.
  - x_axis_label
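The name-value layout and the color rules are simple enough to check with a short script. The Python sketch below reads a parameter file's text, skips comment and blank lines, and assembles a color from its three `_red`, `_green` and `_blue` parameters while enforcing the 0 to 255 range. The helper names and the sample values are made up for illustration; only the parameter names come from the list above.

```python
def parse_par_file(text):
    """Return {name: value} for every non-comment, non-blank line."""
    params = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        # The name ends at the first space; the rest of the line is the value.
        name, _, value = line.partition(" ")
        params[name] = value.strip()
    return params


def rgb(params, prefix):
    """Build an (r, g, b) tuple from prefix_red, prefix_green, prefix_blue."""
    channels = tuple(
        int(params[f"{prefix}_{channel}"]) for channel in ("red", "green", "blue")
    )
    if any(not 0 <= value <= 255 for value in channels):
        raise ValueError(f"{prefix}: intensities must be between 0 and 255")
    return channels


sample = """# The graph axes color (hypothetical values)
axes_color_red 0
axes_color_green 0
axes_color_blue 255
# The X-Axis label
x_axis_label m/z (Da)
"""
params = parse_par_file(sample)
print(rgb(params, "axes_color"), params["x_axis_label"])
```

Splitting only at the first space matters for values such as axis labels and font families, which can themselves contain spaces.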

Fragmentation types are stored in the file fragmentation.txt. The information corresponding to a fragmentation type consists of one or more lines in this file. Individual fragment type entries in the file are separated by a line with only the ">" symbol.

Note that only the score parameters (section 9 below) can be edited for the ESI-TRAP-CID-low-res, ESI-Q-CID and ESI-ETD-low-res instrument types.
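As a sketch of how such a file might be read, the Python function below splits the text into entries at lines that contain only ">". It assumes the first line of each entry is the fragmentation type name and that every later line is a name followed by a space and a value, with repeated names (such as one ion type per line) collected into a list. The function name and the sample entry names are hypothetical.

```python
def parse_fragmentation(text):
    """Return {type_name: {param_name: [values, ...]}}."""
    entries = {}
    block = []
    # A trailing '>' is appended so the final entry is flushed as well.
    for raw in text.splitlines() + [">"]:
        line = raw.strip()
        if line == ">":
            if block:
                name, params = block[0], {}
                for item in block[1:]:
                    key, _, value = item.partition(" ")
                    params.setdefault(key, []).append(value.strip())
                entries[name] = params
            block = []
        elif line:
            block.append(line)
    return entries


sample = """Example-Type-A
it a
it b
it y
>
Example-Type-B
it c
it z
"""
print(parse_fragmentation(sample))
```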

The first line for an entry contains the fragmentation type name. This can be followed by lines (some optional) which override the default fragmentation type parameters. The additional lines have the form of name value pairs separated by a space. The possible parameters are listed below:

1). A list of fragment ions types (one per line) which occur in MS/MS fragmentation.

name: it

possible values: a

a-H2O

a-NH3

a-H3PO4

a-SOCH4

b

b-H2O

b-NH3

b+H2O

b-H3PO4

b-SOCH4

bp2 Doubly charged b ion for data where the charge can't be determined from the peak list.

bp2-H2O

bp2-NH3

bp2-H3PO4

bp2-SOCH4

bp3 Triply charged b ion for data where the charge can't be determined from the peak list. Currently only implemented for the ESI-TRAP-CID-low-res instrument.

c+2 Ion type to deal with incorrectly assigned monoisotopic peak

c+1 Ion type to deal with incorrectly assigned monoisotopic peak

c

cp2 Doubly charged c ion for data where the charge can't be determined from the peak list.

c-1

x

y

y-H2O

y-NH3

y-H3PO4

y-SOCH4

yp2 Doubly charged y ion for data where the charge can't be determined from the peak list.

yp2-H2O

yp2-NH3

yp2-H3PO4

yp2-SOCH4

yp3 Triply charged y ion for data where the charge can't be determined from the peak list. Currently only implemented for the ESI-TRAP-CID-low-res instrument.

Y

z

zp2 Doubly charged z ion for data where the charge can't be determined from the peak list.

z+1

z+1p2 Doubly charged z+1 ion for data where the charge can't be determined from the peak list.

z+2 Ion type to deal with incorrectly assigned monoisotopic peak

z+3 Ion type to deal with incorrectly assigned monoisotopic peak

I Internal ions.

C C-ladder ions.

N N-ladder ions.

i Immonium and low mass ions.

m

d

v

w

h MH-H2O, b-H2O if b, y-H2O if y.

n a-NH3 if a, b-NH3 if b, y-NH3 if y.

B b+H2O if b.

P a-H3PO4 if a, b-H3PO4 if b, y-H3PO4 if y.

S b-SOCH4 if b, y-SOCH4 if y.

MH-H2O

MH-NH3

MH-H3PO4

MH-SOCH4


M±x E.g. M-60, M-2, M+1. Used for ECD/ETD for labelling neutral loss peaks in MS-Product. The losses specified here are also used by the msms_ecd_or_etd_side_chain_exclusion parameter in the params/instrument.txt file.

The following ion types are possible in MS-Tag.

a,a-NH3,a-H2O,a-H3PO4,b,b-H2O,b-NH3,b+H2O,b-H3PO4,b-SOCH4,c+2,c+1,c,c-1,d

bp2,bp2-H2O,bp2-NH3,bp2-H3PO4,bp2-SOCH4,cp2

x,y,y-NH3,y-H2O,y-H3PO4,y-SOCH4,Y,z,z+1,z+2,z+3

yp2,yp2-H2O,yp2-NH3,yp2-H3PO4,yp2-SOCH4,zp2,z+1p2

I,C,N,h,n,B,P,S

None are defined by default.